Decentralized DevOps and Cloud Automation

Innovate and deliver faster than the competition

Introduction to Decentralized DevOps

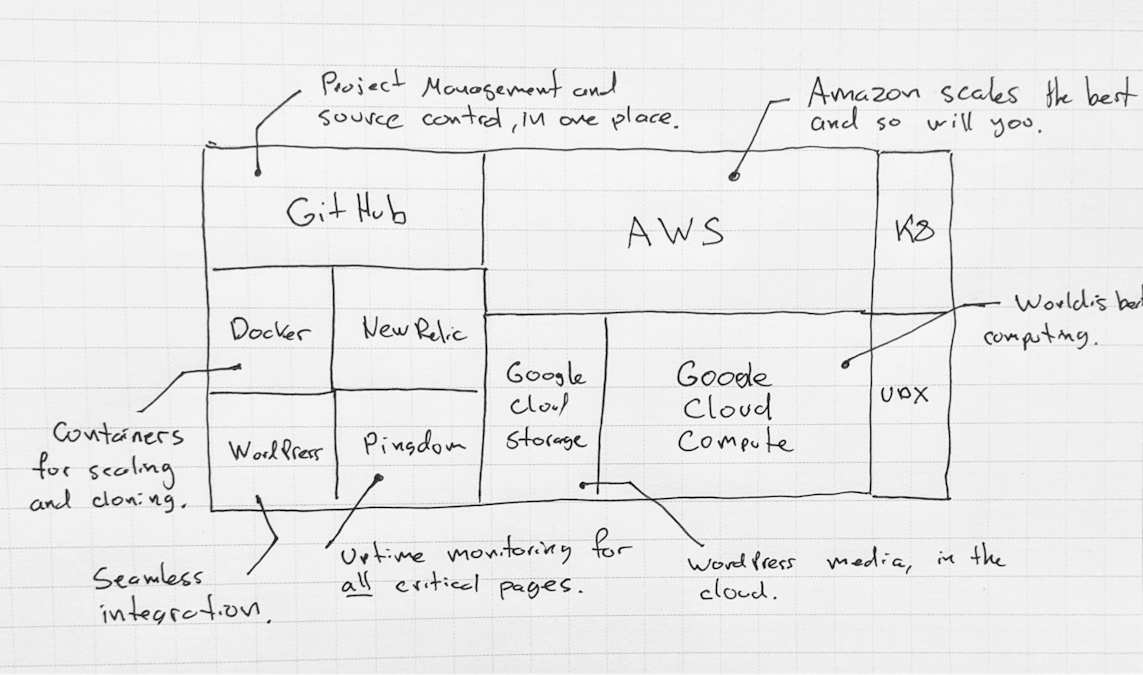

In recent years, centralized development operations (DevOps) models have given way to more decentralized ones, partly due to the rise of cloud computing and container use in software development.

With cloud-based services, it is easier for developers to work on codebases from anywhere. In addition, containers allow for greater flexibility and agility in the software development process. As a result, decentralized DevOps models are becoming increasingly popular.

Put simply, decentralization allows specialized teams and professionals with different expertise to work together cohesively as part of a larger process.

While decentralization has benefits, it is important to consider the trade-offs carefully before adopting this approach. One potential downside is that it can be more difficult to coordinate and manage a decentralized team.

However, if done correctly, decentralized DevOps can provide numerous advantages, including greater flexibility, agility, and freedom for developers.

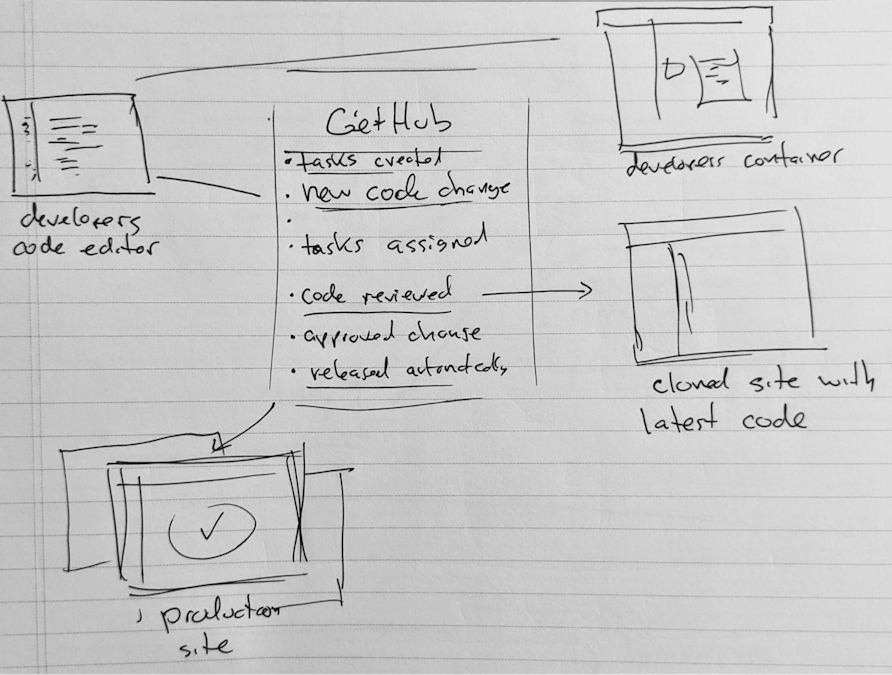

Using decentralized DevOps allows developers to work independently while still being part of a team. This helps speed up the development process. Source control ensures that all code is tracked and versioned, making it easier to roll back changes if necessary.

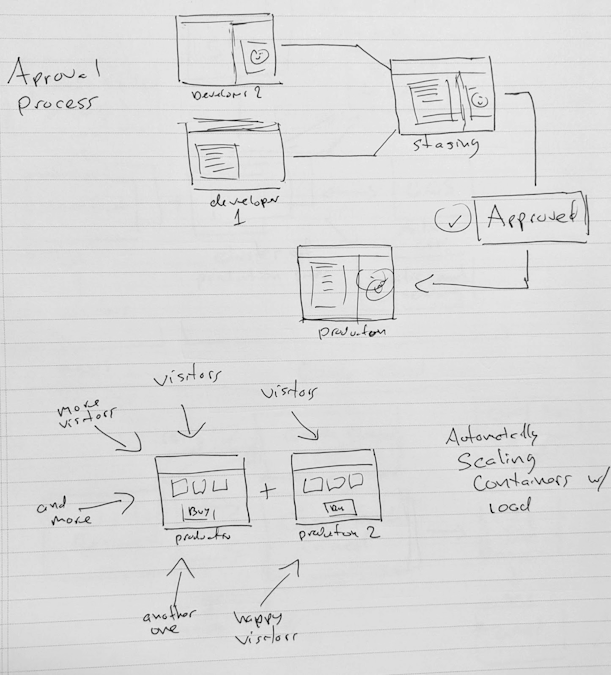

A key advantage of using a control gate in pipeline automation is the ability to have a final say over how the solution is delivered cloud-natively. This helps ensure businesses maintain quality standards and avoid potential problems with their cloud-based solutions.

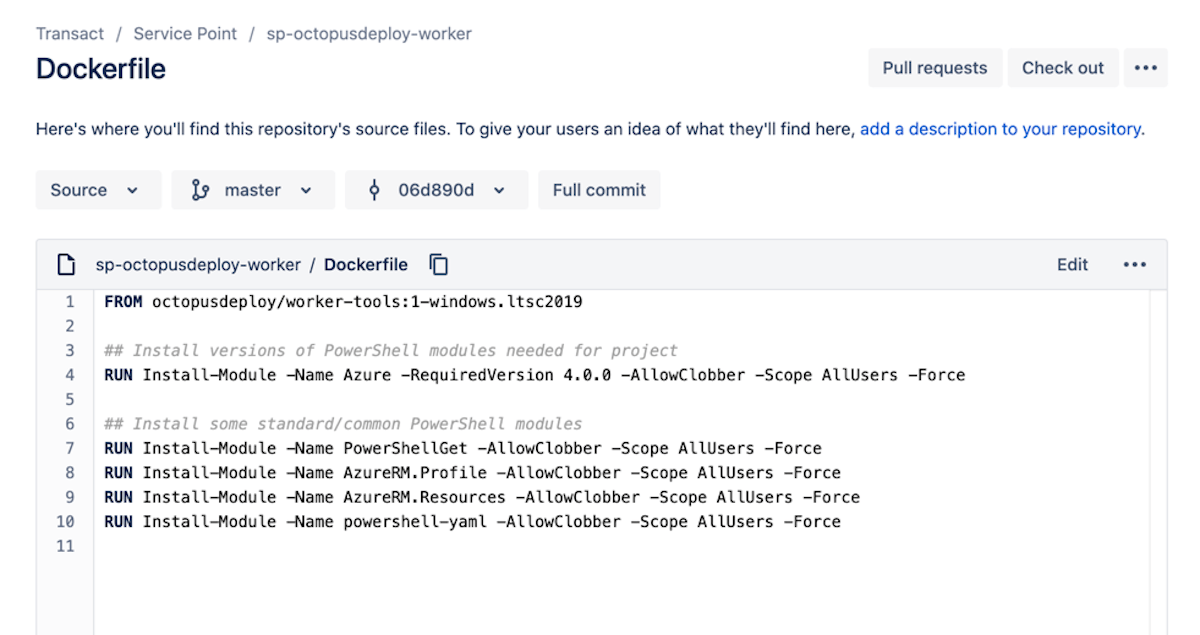

Containerization of Workflow

In the software development industry, it is common to use automation pipelines to facilitate the release and deployment process.

However, these automation pipelines are often executed on-premises, which can lead to issues regarding tooling and configuration. Introducing Container Workers: the next generation of cloud virtual machines for release and deployment tasks.

With Container Workers, you can execute Octopus Deploy, GitHub Actions, Azure DevOps, and Bitbucket Pipelines tasks on-premises with the exact tooling and configuration you need.

Container Workers are defined by the tooling software they need, which is stored in a container registry. This allows for different tooling versions and configurations to be decoupled from the host machines.

And because the environment in which release/deploy scripts are executed matters, using a Docker container created by the product development team gives you full control over the exact environment. In addition, Container Workers provide an abstraction layer between the automation pipeline and the cloud compute service, making it easy to scale up or down as needed without having to reconfigure the automation pipeline itself.
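As a rough illustration of decoupling tooling from the host, the sketch below models a worker as nothing more than a container image reference plus a script to run. The `WorkerSpec` type, the registry host, and the image names and tags are hypothetical and are not taken from any of the products named above; the only real interface assumed is the standard `docker run --rm` command line.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkerSpec:
    """Hypothetical worker definition: the tooling lives in the image, not on the host."""
    registry: str
    image: str
    tag: str

    @property
    def reference(self) -> str:
        # Fully qualified image reference in the form registry/image:tag.
        return f"{self.registry}/{self.image}:{self.tag}"


def docker_command(spec: WorkerSpec, script: str) -> list[str]:
    """Build the argument list for running a release script inside the worker image."""
    return ["docker", "run", "--rm", spec.reference, "sh", "-c", script]


# Two tooling versions can coexist because each one is just a different image tag.
legacy = WorkerSpec("registry.example.com", "deploy-tools", "1.4")
current = WorkerSpec("registry.example.com", "deploy-tools", "2.0")

print(docker_command(current, "echo deploying"))
```

Because the host only needs a container runtime, moving a pipeline between tooling versions in this sketch is a one-line change to the tag rather than a change to the machine.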

To re-platform existing infrastructure for cloud-native development, you need to first assess your current infrastructure and identify which components need to be replaced or updated. You also need to consider how the new infrastructure will fit into the overall development process.

To start such a project, you need to first gather all the necessary requirements and then create a plan of action. This plan should include who will be responsible for each stage of the project and what resources are required.

Once the plan is in place, you can then start building the pipeline components.

Release Controls and Compliance

Deployments can be automated in a variety of ways, depending on the tools and technologies that are used. In some cases, deployments may be triggered automatically when new code is checked into a source control repository.

In other cases, deployments may be initiated manually by clicking a button or typing a command.

Regardless of how they are initiated, automated deployments typically involve the following steps:

Automated deployments can help to speed up the process of getting code changes into production and can help to ensure that all code changes comply with company policies.

In the cloud era, companies are under immense pressure to release new features and functionality at a rapid pace. A deployment pipeline can help to meet this demand by increasing the rate of deployments. At its core, a deployment pipeline is a mechanism for automating the process of software delivery.

By automating repetitive tasks and eliminating manual processes, a deployment pipeline improves both the frequency and the quality of software delivery, in part by allowing for more extensive testing before each release.

As a result, a deployment pipeline is an essential tool for any company that wants to stay competitive in the cloud era.

Without this automation, it becomes very difficult to increase the rate of deployments.

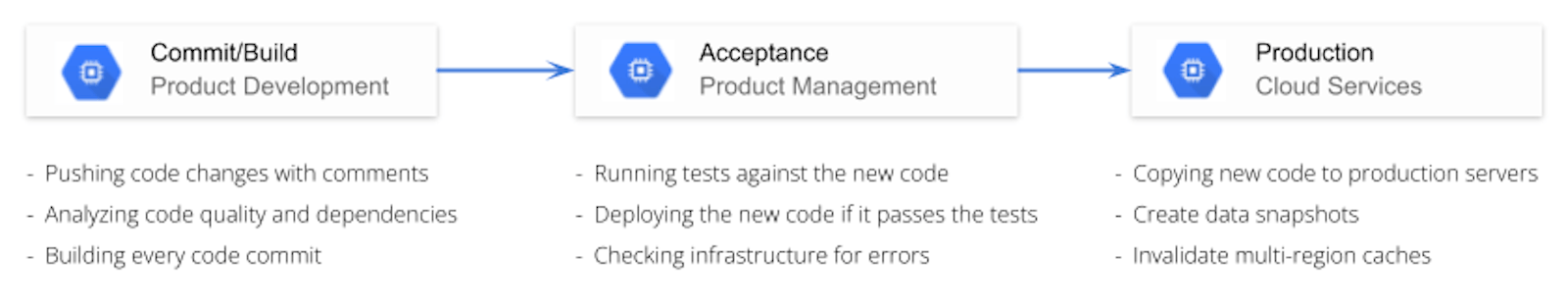

Commit/Build: High-performing teams have developers who commit their changes to the main branch several times a day. The build management system should automatically build and test the new version of the software. Once complete, the build management system should send a package with the new version to the artifact repository.

Acceptance: Acceptance testing should include functional business tests and quality attribute tests, like security and performance. Depending on the product and user or customer expectations, acceptance may extend to an external party doing their testing as part of your pipeline. This ensures that the product meets the expected standards and is ready for release.

Production: Continuous Delivery requires that software is always ready to deploy. You might deploy every change as soon as it's ready or regularly deploy the latest available version.

We can introduce separate stages to handle complex scenarios. For example, you could add component and system performance testing in different stages.
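The staged flow described above can be sketched as an ordered list of checks in which a change only advances while every earlier stage succeeds. This is a minimal sketch: the stage names mirror the text, while the `run_pipeline` function and the stand-in checks are hypothetical and do not represent any particular build system.

```python
from typing import Callable

# A stage is a name plus a check that reports success or failure.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stop at the first failure, and return the stages that passed."""
    passed = []
    for name, check in stages:
        if not check():
            break
        passed.append(name)
    return passed


# Stand-in checks; a real pipeline would build, test, and deploy here.
stages = [
    ("commit/build", lambda: True),
    ("acceptance", lambda: True),
    ("production", lambda: True),
]

print(run_pipeline(stages))
```

Adding a separate performance-testing stage in this sketch is a matter of inserting another entry into the list at the desired position.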

The Ideal Cloud-Native Pipeline Solution

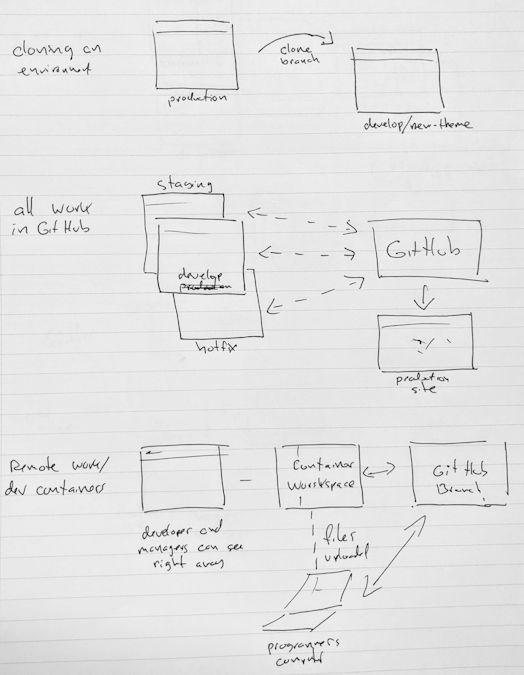

The ideal cloud-native pipeline solution would be one that has control gates throughout the entire process, from development to production. This would allow for a more streamlined and efficient workflow, as well as greater transparency and accountability.

For example, you can have a gate where software is built and tested in a staging environment, then automatically promoted to production if it passes all tests. This helps ensure that only high-quality software reaches customers.
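A gate of this kind can be reduced to a small decision function over test results from staging. The sketch below is illustrative only: `CheckResult` and `gate_decision` are hypothetical names, and the rule that a release with no test results is held rather than promoted is an assumption, not something stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    """Outcome of one test suite run in the staging environment."""
    name: str
    passed: bool


def gate_decision(results: list[CheckResult]) -> tuple[str, list[str]]:
    """Return "promote" or "hold", together with the names of any failed checks."""
    failed = [r.name for r in results if not r.passed]
    # Assumption: with no evidence at all, the gate holds instead of promoting.
    if not results or failed:
        return "hold", failed
    return "promote", failed


staging_results = [CheckResult("unit", True), CheckResult("smoke", True)]
print(gate_decision(staging_results))
```

Returning the failed check names alongside the decision keeps the gate transparent: anyone reviewing a held release can see which checks blocked it.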

The control gate should be implemented in a software factory, which is an automated build and release process that can be used to produce software products. The software factory should include a pipeline automation system, which is a system that automates the execution of tasks in a pipeline. The cloud-native platform should be used to host the control gate, software factory, and pipeline automation system.

Using Pull Requests (PRs) is an efficient way to integrate subject-matter experts (SMEs) into your process. PRs allow for automated testing and continuous integration (CI), which saves time and helps ensure quality. Cloud-based DevOps tools make it easy for SMEs to work from anywhere and integrate seamlessly into your process. PRs are an essential part of DevOps and can help your team work more efficiently.

Compliance checks within Pull Requests can help to ensure that all code changes comply with company policies before they are deployed to production. By automating the process of deploying code changes, developers can focus on writing code, rather than on manual tasks. This helps to improve the quality of the codebase and reduces the likelihood of errors. In addition, automated deployments allow for more frequent releases, which can help to speed up the pace of innovation.
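To make the idea of a compliance check concrete, the sketch below evaluates a simplified pull request against two example policies. The `PullRequest` fields, the `infra/` protected path, and the approval thresholds are all hypothetical; a real check would read this data from the source control platform and apply the company's actual policies.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical, simplified view of a pull request."""
    title: str
    approvals: int
    checks_passed: bool
    changed_files: list[str] = field(default_factory=list)


def compliance_violations(
    pr: PullRequest,
    min_approvals: int = 1,
    protected_prefix: str = "infra/",
    protected_min_approvals: int = 2,
) -> list[str]:
    """Return readable policy violations; an empty list means the PR is compliant."""
    violations = []
    if not pr.checks_passed:
        violations.append("automated checks have not passed")
    required = min_approvals
    # Assumed policy: changes under a protected path need extra review.
    if any(path.startswith(protected_prefix) for path in pr.changed_files):
        required = max(required, protected_min_approvals)
    if pr.approvals < required:
        violations.append(f"needs {required} approvals, has {pr.approvals}")
    return violations


pr = PullRequest("Resize cluster", approvals=1, checks_passed=True,
                 changed_files=["infra/cluster.yaml"])
print(compliance_violations(pr))
```

Running a function like this on every PR means a policy failure shows up during review, before the change is merged and deployed.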

Re-Platforming Your Cloud Compute Infrastructure for Delivery

The pipeline should be built by a team of developers who are well-versed in cloud-native technologies. They should also have a good understanding of the business requirements and how the pipeline will fit into the overall development process.

The pipeline should be maintained by a team of DevOps specialists who are responsible for keeping it up to date and ensuring that it meets all the necessary requirements.

There are major benefits to using code to define your pipeline and infrastructure:

Infrastructure-as-Code: The practice of writing the configuration of your infrastructure in a code format, such as YAML or JSON, that can be treated as software. The benefit is consistent infrastructure across all environments.

Pipeline-as-Code: Uses source code to track changes to the pipeline process, allowing for pipeline development environments, rollback, and the other benefits that source control brings to software development.

Disaster Recovery: The process of ensuring that your infrastructure can be quickly recovered in the event of a disaster. This usually involves having a backup of your infrastructure and having a plan for quickly restoring it.
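The consistency benefit of Infrastructure-as-Code can be illustrated with a single base definition from which every environment is rendered. This is a minimal sketch under stated assumptions: the setting names, values, and the `render` helper are hypothetical, and real tooling would apply the rendered configuration to a cloud provider rather than print it.

```python
import json

# One base definition, kept in source control, shared by every environment.
BASE = {"instance_type": "small", "replicas": 1, "monitoring": True}

# Only the intentional differences between environments are recorded.
OVERRIDES = {
    "staging": {"replicas": 2},
    "production": {"instance_type": "large", "replicas": 4},
}


def render(environment: str) -> dict:
    """Combine the base definition with the overrides for one environment."""
    config = dict(BASE)  # copy so the shared base is never mutated
    config.update(OVERRIDES.get(environment, {}))
    config["environment"] = environment
    return config


for env in ("dev", "staging", "production"):
    print(json.dumps(render(env), sort_keys=True))
```

Because the definition is ordinary text in a repository, the same files also support the other two items above: pipeline changes can be reviewed and rolled back, and an environment can be recreated from its definition after a disaster.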

Automation of Continuous Delivery

Automation is not the goal of Continuous Delivery, but it is fundamental to achieving high-quality, high-frequency software deployments. As a result, companies that are able to successfully automate their software development and deployment processes are able to operate more efficiently and effectively.

The following Key Capabilities have a high correlation with successful operations:

Continuous integration is a process that helps avoid merge conflicts and allows developers to identify issues with new code changes quickly. By integrating code changes seamlessly into a codebase, it makes it easier for a development team to work together effectively. As a result, it speeds up the delivery of new features and bug fixes to customers.

Continuous testing is the process of automatically testing new code changes against an existing codebase in order to avoid introducing new bugs and improve overall code quality. By using continuous testing, developers can get feedback on their code changes faster and make necessary adjustments. Continuous testing is an important part of ensuring quality code regardless of project size.

Monitoring and observability are key to maintaining a healthy and performant application. By monitoring key metrics and tracing requests as they flow through your system, you can identify issues quickly and prevent them from becoming bigger problems.

Loosely coupled architecture is an approach to software development where components are loosely interconnected. This allows individual components to be changed or scaled without affecting the others.

A data change management system (DCMS) is a collection of tools and processes used by an organization to track and control changes to data. A DCMS helps organizations ensure the accuracy and consistency of their data, and ensures that any changes are safely and efficiently integrated into their existing systems.

Process Change Management is a systematic approach to managing changes to business processes. It ensures that changes can be safely and efficiently integrated into an existing system, minimizing disruptions and maximizing benefits.

Deployment automation refers to the process of automating the deployment of software applications so that they can be deployed quickly and safely. This is often done through the use of scripts or other automated tools that help to streamline the process.

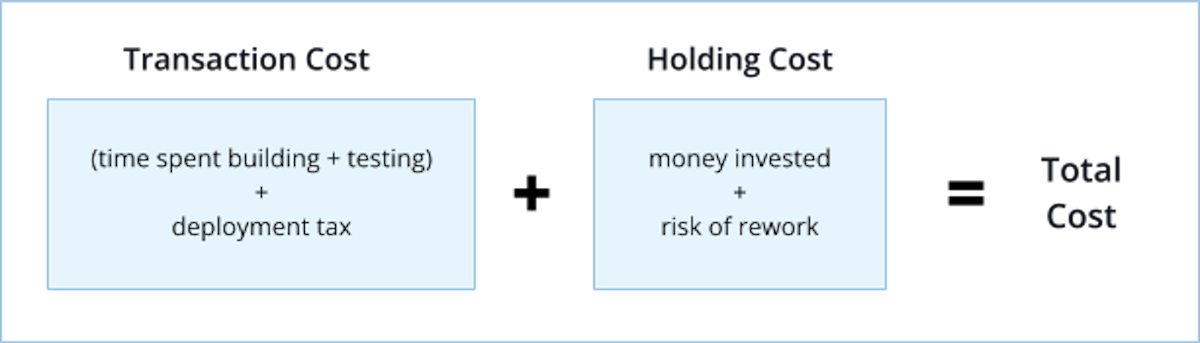

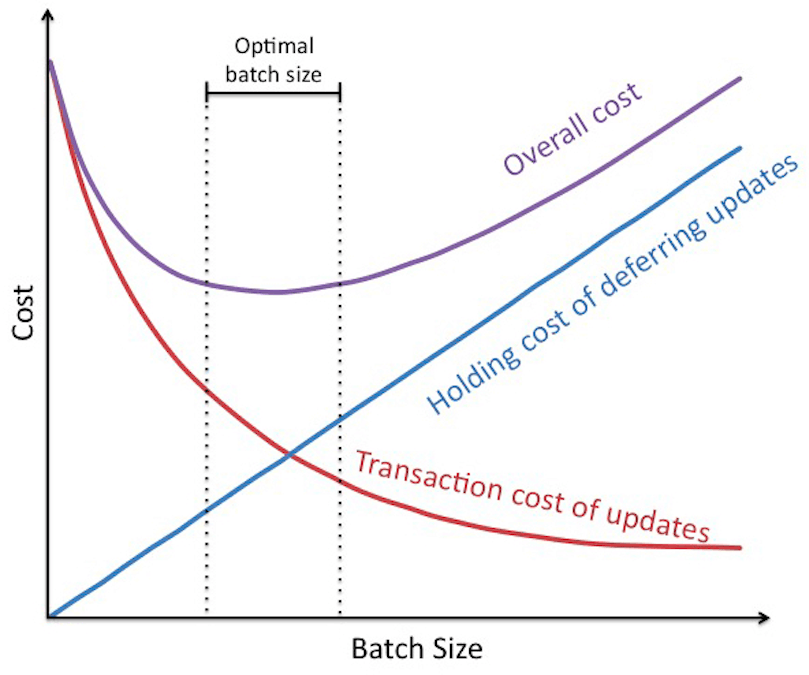

The Cost of Holding Software Deployments

Deployment of software can be expensive, especially if it is done frequently. In order to minimize the cost, some organizations may choose to hold on to the software for a period of time after a release is ready for deployment. This can lead to a combined cost of deploying and holding the software.

In the past, teams would optimize transaction costs by calculating combined test durations or holding periods. This approach doesn't take into account other aspects, such as interval testing, and it makes things harder for site reliability engineers (SREs), who are already working long hours because of the eagerness for new features every week.

Making one change at a time is better than making many changes at once. This way, the cost of each change is lower. If you make changes all at once, the cost goes up and the risk also goes up.

You can't prevent the cost of holding from increasing, but you can focus on reducing the transaction cost.
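The trade-off between the two costs can be made concrete with a deliberately simple model. Assume one change becomes ready per day, every deployment has a fixed transaction cost, and each finished change costs a fixed amount for every day it waits. If changes are released in batches of size n, a change waits (n - 1) / 2 days on average. The model and all of the numbers below are assumptions chosen for illustration, not measurements.

```python
def cost_per_change(batch_size: int, transaction_cost: float,
                    holding_cost_per_day: float) -> float:
    """Average cost of one change when changes are deployed in batches."""
    # With one change ready per day, a change waits (n - 1) / 2 days on average.
    average_wait_days = (batch_size - 1) / 2
    return transaction_cost / batch_size + holding_cost_per_day * average_wait_days


def best_batch_size(transaction_cost: float, holding_cost_per_day: float,
                    max_batch: int = 60) -> int:
    """Batch size with the lowest average cost per change under this model."""
    return min(
        range(1, max_batch + 1),
        key=lambda n: cost_per_change(n, transaction_cost, holding_cost_per_day),
    )


# Expensive, manual deployments make batching look attractive ...
print(best_batch_size(transaction_cost=800, holding_cost_per_day=100))
# ... while cheap, automated deployments favor one change at a time.
print(best_batch_size(transaction_cost=50, holding_cost_per_day=100))
```

In this model the holding cost per day is outside the team's control, so the only lever that moves the optimum toward single-change deployments is a lower transaction cost.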

The deployment tax can be a significant expense for organizations, and it is important to consider this cost when making decisions about software deployments. Organizations should work to minimize the deployment tax as much as possible by optimizing their deployments and using automation where possible.

How CD Can Help Address Risk Aversion in Traditional Organizations

In a traditional organization, decision-making is centralized and risks are often siloed. This can lead to a risk-averse culture where innovation is stifled.

In contrast, a generative organization encourages decentralized decision-making and shares risks across the organization.

Something is generative when it can create something new or original. A generative idea produces new ideas, a generative process produces new outcomes, a generative relationship builds new capabilities in both partners, and a generative leadership style helps others see opportunity in their actions.

A generative organization is an organization that can create opportunities for itself, its members, its stakeholders and its community. Finally, a generative business creates opportunities for others while it creates value for itself.

This allows for more collaboration and creativity, as well as a greater willingness to experiment and learn from failure. Additionally, generative organizations introduce improvements after investigating systemic problems, rather than placing blame on individuals or not addressing the problem at all.

As a result, they are able to continuously improve their performance and better adapt to change. These cultural capabilities result in multiple positive enterprise-wide network effects, such as:

Common tools and methods allow for efficient and accurate communication across teams, which leads to better coordination and faster execution of tasks.

Teams share best practices, which leads to improved quality and consistency of work.

Clear responsibilities and goals result in individuals being able to focus on their tasks and avoid overlap or confusion.

Software is written to solve problems. Software that has been written, tested, and reviewed, and yet isn't in the hands of end users, isn't actually solving a problem.

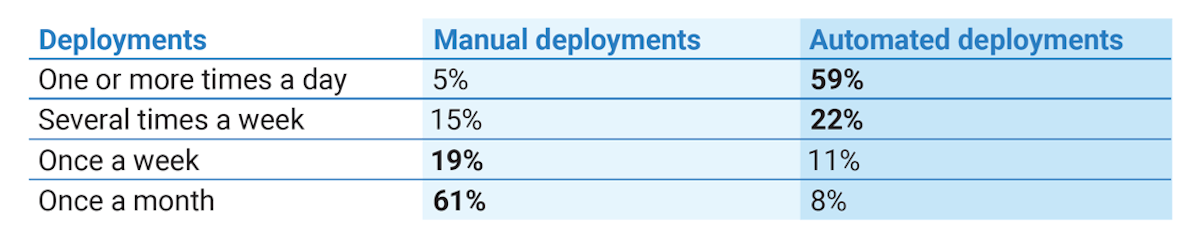

Without automation, teams are most likely to deploy once a month

With automation, most teams deploy software at least once a day

The most disappointing part about this result, however, isn't the wasted time spent deploying software manually. It's in the opportunity cost of features and bug fixes, which are otherwise complete, tested and ready to be used, stuck in the process waiting for next month's deployment when they could be solving real problems for end-users.

How to Automate Quality, Policy, and Innovation

Deployment automation solutions can vary in sophistication depending on the project. Our goal is to craft tools that help people drive quality, evolve policy, and innovate with impunity by fielding automated deployment solutions that provide the following capabilities:

Infrastructure-as-Code: Automates the process of provisioning and configuring servers, as well as automating common operations tasks such as software installation and updates.

Pipeline-as-Code: Automates the detection of differences in configuration between environments, and adjusts settings as needed to ensure that deployments are consistent across all environments.

Secrets: Protects sensitive information (such as passwords and API keys) by storing them in encrypted form, and ensuring that they are only accessible to authorized personnel.

Pipeline Visualization: Provides a central location where team members can view the status of all deployments and releases, including details on which servers have been deployed, what changes have been made, and when the release was completed.

Control Gates: Provide real-time auditing of all deployment activity, including who initiated the deployment, what changes were made, and when the changes were applied. This allows team members to quickly identify any issues or problems with a release.

Monitoring and Alerting: A solution for continuously monitoring the health of your cloud compute environment. It can automatically notify dev team members of any problems, so you can address them before they cause damage or downtime. Monitoring and Alerting is designed to be vendor-agnostic and multi-cloud. And because changes to alerts require Pull Requests, everyone on your team can stay up to date on them.

Pull Request Intelligence: Automatically reviews and reports on code changes made by your team and the other teams it works with. This makes it easier to identify potential problems and make data-driven decisions to improve your team's performance. Pull Request Intelligence is a must-have tool for any software development team looking to improve its workflow.

We work with teams to help them make changes more often, and we do this by providing them with curated technical capabilities. If your organization doesn't have these capabilities, it can be hard to introduce Continuous Delivery.

Measuring Automation Optimization

Cloud automation can save time and effort by automating tasks that would otherwise have to be done manually. Automation can also increase agility by allowing changes to be made quickly and easily.

There are a number of different metrics that can be used to track the benefits of cloud automation.

One common metric is the amount of time and effort that is saved by using automation tools. Another metric is the increase in agility that results from using cloud automation.

The Mean Time to Recover (MTTR) metric tracks the average time it takes to restore an application from a failed state, in minutes. This information helps correlate change activity to system stability, allowing dev teams to make changes that will improve the overall stability of their applications.
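As a small worked example, MTTR can be computed from pairs of failure and restoration timestamps. The `mttr_minutes` function and the incident data are hypothetical; in practice the timestamps would come from a monitoring or incident-tracking system.

```python
from datetime import datetime


def mttr_minutes(incidents: list[tuple[datetime, datetime]]) -> float:
    """Average minutes between failure and restoration across incidents."""
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = [
        (restored - failed).total_seconds() / 60
        for failed, restored in incidents
    ]
    return sum(durations) / len(durations)


# Made-up incidents as (failed_at, restored_at) pairs.
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),  # 30 minutes
    (datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 15, 30)),  # 90 minutes
]

print(mttr_minutes(incidents))  # 60.0
```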

The code change volume metric measures the number of code changes a developer makes in source control. This metric gives dev teams better visibility into the coding activities of their development teams. They can answer questions such as who makes the most code changes and which repositories are the most active over time.
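A metric like this amounts to counting changes along two dimensions. The sketch below uses made-up (author, repository) pairs; in practice the records would be exported from the source control system.

```python
from collections import Counter

# Hypothetical change records as (author, repository) pairs.
changes = [
    ("alice", "api"),
    ("bob", "api"),
    ("alice", "web"),
    ("alice", "api"),
]

changes_by_author = Counter(author for author, _ in changes)
changes_by_repository = Counter(repository for _, repository in changes)

# Who makes the most code changes, and which repository is the most active?
print(changes_by_author.most_common(1))
print(changes_by_repository.most_common(1))
```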

Deployment metrics help track the frequency and quality of continuous software releases to end users. They present data about application deployment, such as deployment state (failure or success) and frequency. This information can help organizations identify and fix any issues with their release process, ensuring that applications are deployed smoothly and consistently.
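Frequency and deployment state can be summarized from a simple log of deployments. This is a minimal sketch: the `deployment_metrics` function, the record shape, and the sample data are hypothetical, and frequency is measured here over the span of days between the first and last recorded deployment.

```python
from datetime import date


def deployment_metrics(deployments: list[tuple[date, bool]]) -> dict[str, float]:
    """Summarize (day, succeeded) records into frequency and success rate."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    days = [day for day, _ in deployments]
    # Inclusive span, so deployments on a single day count as one day.
    span_days = (max(days) - min(days)).days + 1
    successes = sum(1 for _, succeeded in deployments if succeeded)
    return {
        "deployments_per_day": len(deployments) / span_days,
        "success_rate": successes / len(deployments),
    }


deployments = [
    (date(2024, 3, 1), True),
    (date(2024, 3, 1), False),
    (date(2024, 3, 2), True),
    (date(2024, 3, 2), True),
]

print(deployment_metrics(deployments))
```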

Build metrics help dev teams track the frequency and quality of their code build process. These metrics indicate which build projects or phases take the longest time to run, which build projects are the most active over time, and which build projects fail the most before the code changes enter the release pipeline.

Repository activity metrics show the amount of coding activity in a repository. This information can help dev teams understand which repositories are the most active and optimize their development processes.

The benefits of cloud automation are well documented and include increased efficiency, agility and reduced costs. However, as your organization moves to the cloud, it is important to have a way to measure the effectiveness of your cloud automation efforts.

One way to do this is to develop metrics specifically for cloud automation. These metrics can be used to track how much time and effort is saved by using cloud automation tools and scripts. These metrics can also be used to measure the increase in agility that results from using cloud automation.

Return on Automation Investment

Continuous Delivery and DevOps are not just about tools, but automation is a key element in reducing repetitive work, or toil. A fully implemented decentralized DevOps model can help teams avoid the common dip in performance, as well as the six-to-twelve-month wait before cultural capabilities such as the following become observable:

Psychological safety: A team environment in which all members feel safe to take risks and express themselves openly is conducive to creativity and innovation. Members of a psychologically safe team are more likely to share information and ideas, and less likely to engage in destructive behaviors such as blaming or gossiping.

Cross-functional teams: By involving individuals from different disciplines, such as engineering, QA, and operations, in the software deployment process, companies can streamline the deployment process and ensure that all aspects of the system are tested and optimized.

Shared responsibility: By sharing responsibility for the success or failure of a software deployment among all team members, companies can create a sense of ownership and accountability. This can help ensure that everyone is working together towards a common goal, and that no individual is left holding the bag if something goes wrong.

Innovation: In order to keep up with the competition, companies need to be constantly innovating their software deployment processes. This may involve using new technologies or methods, or simply finding new ways to optimize the existing process.

Summary

As cloud computing and container use become more prevalent in software development, decentralized DevOps models are becoming increasingly popular.

However, deploying software frequently can be expensive, so some organizations choose to hold on to the software for a period of time after a release is ready for deployment, which adds a holding cost on top of the cost of deploying.

Teams need pipelines for two main reasons: automation and best practices. Automation allows teams to move faster and reduces human error. Best practices ensure that code is written in a consistent and reliable manner.

The ideal cloud-native pipeline solution should be able to orchestrate the deployment of applications and services across multiple cloud providers and data centers. It should also be able to manage the lifecycle of these applications and services, and automate the deployment process.