Deploying With Impunity

Software Logistics methodology for Cloud Hopping

Abstract

Software systems operating in contested digital environments face a fundamental challenge: maintaining operational integrity despite infrastructure failures, security threats, and deployment demands. This paper examines Cloud Hopping, a methodology that addresses these challenges through structural enforcement rather than administrative controls.

Cloud Hopping implements three foundational mechanisms: Crypto Canary for continuous security validation, Repository as Command Center for declarative configuration, and Self-Validating Deployments for autonomous verification. These mechanisms create systems that can migrate between providers, regions, and runtime contexts while maintaining operational integrity.

Empirical analysis across multiple sectors demonstrates that Cloud Hopping significantly outperforms traditional approaches, achieving higher deployment frequencies while simultaneously reducing incidents. Consistent with Wright's Law, which holds that unit costs fall by a steady percentage each time cumulative output doubles, organizations implementing this methodology have reduced deployment labor costs from millions of dollars to nearly zero through structural optimization.

The methodology establishes a positive correlation between security posture and operational efficiency. For organizations implementing Cloud Hopping, deployment becomes a continuous, validated flow rather than a series of discrete, high-risk events—enabling operations with impunity in environments where traditional approaches fail to maintain operational integrity.

Executive Summary

This methodology draws from time-tested military communications approaches. Field radio operators in contested territory constantly change frequencies to avoid detection and jamming. Each frequency shift happens automatically, with the radio validating the new channel before transmitting. The message continues uninterrupted while the underlying machinery adapts to threats. This principle, avoiding static vulnerabilities through continuous movement and validation, forms the foundation of Cloud Hopping. Software isn't static: it moves between providers, regions, and contexts, continuing to flow where a fixed deployment would break.

World War II's frequency-hopping spread spectrum (FHSS) technology and the Cold War's ARPANET, with its dynamic packet routing, demonstrated the same principle. These innovations survived by constantly adapting rather than remaining static targets.

This methodology is called "cloud hopping": systems shift between providers, regions, and runtime contexts (computing environments) as routine operation, not emergency response. With every move, they verify their environment and reestablish integrity. Nothing runs unless it's ready. If conditions aren't right, execution halts immediately. Just as frequency hopping prevented adversaries from locking onto radio transmissions, cloud hopping prevents systems from being pinned to any single point of failure, creating resilient machinery that keeps functioning even as individual components collapse.

This creates a maneuver strategy. When a university's credential system detects regional degradation, it automatically relocates workloads, validates the new environment, and resumes operations without human involvement. When healthcare providers rotate thousands of encryption keys, the process completes with zero downtime. These capabilities deliver decisive advantages where minutes of downtime translate to significant business impact.
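The relocation loop described above can be sketched in a few lines. This is a minimal Python illustration, not the production system: the `Region` type, the `relocate` function, the health flag, and the `validate` callback are all hypothetical stand-ins for whatever probes and checks a real deployment would use. The property it shows is the one the text emphasizes: a workload moves only to a region that passes validation, and halts rather than starting somewhere unverified.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Region:
    name: str
    healthy: bool  # hypothetical health signal, e.g. the result of a monitoring probe


def relocate(current: str, regions: Sequence[Region],
             validate: Callable[[Region], bool]) -> str:
    """Return the region the workload should run in.

    Stays put while the current region reports healthy. Otherwise it walks the
    other healthy regions in order and moves only to one that passes validation.
    If nothing validates, it raises instead of starting somewhere unverified.
    """
    by_name = {r.name: r for r in regions}
    here = by_name.get(current)
    if here is not None and here.healthy:
        return current
    for candidate in regions:
        if candidate.name == current or not candidate.healthy:
            continue
        if validate(candidate):  # e.g. secrets resolve, dependencies answer
            return candidate.name
    raise RuntimeError("no validated region available; halting")
```

For example, with the regions `south-central-us` (unhealthy), `east-us`, and `west-us` (both healthy) listed in that order, a `validate` callback that rejects `east-us` makes `relocate("south-central-us", ...)` return `"west-us"`.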

This adaptive approach proved vital when Russia invaded Ukraine in 2022. Russian forces didn't just bomb buildings; they also targeted digital infrastructure. Ukraine had digitized almost everything: identities, property records, citizenship itself. If those systems failed, people wouldn't just lose access to services; they would lose proof that they existed. These weren't merely attacks on systems; they were attempts to delete lives.

Ukraine didn't let that happen. The government moved fast, migrating its systems to the cloud and strategically distributing data across the globe. It systematically relocated what made the country run, deploying it where missiles couldn't reach, and transformed infrastructure from a static target into a dynamic, resilient system.

By 2025, the line between digital identity and personal existence has blurred beyond recognition. Whether it's proof of citizenship in Ukraine or a student ID at Duke University, our digital identities now determine access to the most basic necessities. When Duke University faced its own existential threat in 2018, the stakes were similarly high, though on a different scale. At 2:37 AM, Apple Pay received the alert: Azure's South Central US region had failed catastrophically after lightning strikes. Ten thousand students faced the prospect of being unable to unlock the doors of cafes, classrooms, and dorms, because digital credentials had become their only way to prove identity and access rights.

But something remarkable happened: nothing. No outage. No panic. The machinery detected the anomaly, shifted regions, validated state, and continued operations, all without human intervention. While other systems remained down for 72 hours, students simply tapped their phones against readers and life continued uninterrupted.

The systems we rely on, our digital infrastructures, aren't abstract. They're utilities as essential as water or power. Each component integrates precisely like a calibrated mechanism, built to move across clouds, time zones, and failure domains. While cloud platforms themselves are remarkably resilient, most systems built on them still operate on hope rather than structure, a dangerous disconnect that creates significant risk.

This evolution begins at the foundation: creating a unified codebase that connects directly to both production and staging environments. As illustrated below, this shared context eliminates the classic "works for me" scenario by ensuring all team members work from the same codebase against verified environments. This creates consistent, predictable deployment flows where validation becomes inherent rather than applied.

The architecture implements layer independence inspired by the OSI model (the network architecture standard that separates functions into layers)—infrastructure redeployed without reconfiguring applications, secrets rotated without rebuilding containers, and configuration changed without rewriting code. Beyond DevOps practices, this approach enforces structural discipline, operational rigor, and execution integrity.

Unlike conventional methods that treat deployment as a single monolithic event, this approach establishes a software logistics pipeline. The visualization below shows how traditional linear development transforms into a validation-centric system. Each stage incorporates verification checkpoints that components must satisfy. This creates natural filtration in which only validated changes advance, establishing guardrails rather than just automation paths.

The diagram demonstrates how development phases connect through verification mechanisms, not simple handoffs. Validation becomes inherent to the flow rather than externally applied. The structure ensures consistent quality while increasing throughput—demonstrating how security and velocity align through structural enforcement.

At the heart of this methodology is software logistics—machinery packaged with explicit rules for secret resolution, operational conditions, and degradation behavior. Each deployment carries its own contract, eliminating tribal knowledge. Execution is conditional, not hopeful—designed with foresight, tested rigorously, delivered with confidence.
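One way to picture a deployment that "carries its own contract" is as a small declarative object shipped with the artifact. The sketch below assumes a hypothetical `DeploymentContract` holding secret names, required environment values, and a degradation policy; none of these names come from the methodology itself. Execution is decided by the contract, not by whoever happens to run the deploy.

```python
from dataclasses import dataclass
from typing import List, Mapping, Tuple


@dataclass(frozen=True)
class DeploymentContract:
    """Hypothetical contract that travels with a deployment artifact."""
    service: str
    required_secrets: Tuple[str, ...]  # secret *names* resolved at runtime, never values
    required_env: Tuple[str, ...]      # operational conditions, e.g. region or feature flags
    on_missing: str = "halt"           # degradation behavior: "halt" or "degrade"

    def unmet(self, env: Mapping[str, str]) -> List[str]:
        """Every declared requirement that is absent or empty in `env`."""
        return [name for name in (*self.required_secrets, *self.required_env)
                if not env.get(name)]

    def decide(self, env: Mapping[str, str]) -> str:
        """Execution is conditional: "run" only when nothing declared is missing."""
        return "run" if not self.unmet(env) else self.on_missing
```

Because the contract is data, the same check can run in CI, at container start, and after every relocation without anyone needing to remember what the service requires.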

This methodology has been proven effective across mission-critical enterprise implementations with transformative outcomes:

- A mobile credential system recognized as "Apple Pay's most successful partner launch" that maintains continuous functionality even during power outages

- Critical national infrastructure in Ukraine that preserved essential operations despite physical bombardment of data centers

- Financial systems processing billions in daily transactions with zero security incidents

- Healthcare environments exceeding stringent compliance requirements while delivering 99.9%+ uptime

These successes stem from reconceptualizing software deployment as continuous flows through validation pipelines, not static artifacts. Unlike traditional systems with manual gates, this methodology makes verification an integral property of the software itself. The visualization below reveals validation as a natural property of the deployment machinery, not an external process.

This visualization shows actual changes flowing through a production pipeline over six months. Each colored stream represents a system component, with width indicating volume. The key insight: most changes never reach production, not because humans reject them but because they fail automated validation. This isn't failure; it's the system working as designed, catching issues while they are still inexpensive to fix rather than after deployment, when costs multiply.

Stream narrowing toward production shows how structural validation creates natural filtration. Changes compromising security, performance, or reliability are automatically rejected without human intervention. This simultaneously delivers velocity (thousands of changes processed) and safety (only validated changes deployed).
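The filtration effect can be modeled as a chain of ordered gates. In this minimal sketch the gate names, the change attributes (`adds_secret`, `p95_ms`, `tests_passed`), and the 200 ms threshold are hypothetical; the point is that each change stops at the first gate it fails, and the per-gate rejection counts are what narrow the streams in a visualization like the one described.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Change = Dict[str, object]                   # hypothetical: measured attributes of one change
Gate = Tuple[str, Callable[[Change], bool]]


def run_funnel(changes: Iterable[Change], gates: List[Gate]):
    """Pass each change through ordered gates; it stops at the first gate it fails.

    Returns (accepted_changes, rejections_per_gate). No human is in the loop:
    a change either satisfies every gate or is filtered out.
    """
    rejected = {name: 0 for name, _ in gates}
    accepted = []
    for change in changes:
        for name, check in gates:
            if not check(change):
                rejected[name] += 1
                break
        else:  # runs only when no gate broke out of the inner loop
            accepted.append(change)
    return accepted, rejected


# Hypothetical gates and thresholds, for illustration only.
GATES: List[Gate] = [
    ("security", lambda c: not c["adds_secret"]),
    ("performance", lambda c: c["p95_ms"] <= 200),
    ("reliability", lambda c: c["tests_passed"]),
]
```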

For your organization, this translates to tangible benefits:

- Automatic rejection of 63% of changes - Issues caught when inexpensive to fix

- Identical validation across components - Consistency throughout diverse portfolios

- Validation gates, not manual approvals - Requirements enforced through structure, not ceremony

- Governance embedded in machinery - Thousands of changes processed with minimal human intervention

Organizations implementing these patterns achieve 10-20x higher deployment frequencies while reducing incidents by 80-90%. Safety doesn't come from slowing down—it comes from guardrails making unvalidated deployments impossible.

This capability treats software as an operational system that maintains integrity under pressure. When deployment architecture enforces its own rules, teams focus on delivering value, not managing infrastructure. The machinery handles validation, security, and recovery automatically, creating both velocity and safety. This fundamentally transforms how organizations deliver software.

Imagine your critical system facing a regional outage, security threat, or demand surge—and responding automatically by shifting resources, validating state, and continuing operations without human intervention. That's deployment impunity—the freedom to operate with absolute confidence regardless of conditions. Not just possible—proven.

Operational Doctrine Meets Digital Infrastructure

These principles originate from Department of Defense operating procedures, transferred to civilian infrastructure by veterans with backgrounds in military communications and cyber operations. Their experience with secure, resilient communications systems—designed to function in contested environments with clear protocols but adaptable execution—shaped the fundamental approach. Technical elements like crypto rollovers and dynamic redeployment directly implement DOD communications tactics such as frequency hopping, while the organizational model draws from Marine Corps reconnaissance communications principles that empower teams with decision-making authority at the edge. This dual inheritance creates systems where both code and teams operate under the same principle: verify before trusting, maintain capability through movement, and empower execution at the point of impact.

Transformative Systems

This methodology isn't just about operational resilience—it's about enabling you to build transformative systems that were previously impossible to deliver reliably. At its core is a comprehensive DevSecOps pipeline that integrates security throughout the development lifecycle rather than treating it as an afterthought.

The DevSecOps pipeline shown above represents the structural foundation of this methodology. It transforms traditional security gates from manual checkpoints into automated validation mechanisms that operate continuously throughout the development process. Each phase—from initial code commit through scanning, building, testing, and deployment—includes embedded security controls that validate compliance with organizational standards. This integration ensures that security requirements are addressed from the beginning of development rather than being bolted on at the end, dramatically reducing both risk and remediation costs.
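As one concrete example of an embedded control, the commit stage might refuse any change that adds credential-like strings. This is a deliberately small Python sketch: the two regular expressions (the `AKIA` prefix of AWS access key IDs and PEM private-key headers) are illustrative, and `scan_diff` and `commit_gate` are hypothetical names rather than any particular scanner's API. Dedicated secret scanners use far larger, tuned rule sets.

```python
import re
from typing import List, Tuple

# Illustrative rules only; production scanners ship much larger, tuned rule sets.
SECRET_RULES = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_diff(diff_text: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule) for every *added* line of a unified diff that matches."""
    findings = []
    for lineno, line in enumerate(diff_text.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue  # skip context lines, removed lines, and file headers
        for rule, pattern in SECRET_RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def commit_gate(diff_text: str) -> bool:
    """The commit stage passes only when the scan finds nothing."""
    return not scan_diff(diff_text)
```

Only added lines are inspected, so a change that removes a leaked key passes the gate while a change that introduces one is stopped before it reaches the build stage.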

With these principles and pipeline architecture in place, you can create:

- Digital identity systems with fast access times for physical doors, secure payment authorization, and resilient operation—serving students globally with functionality that persists even when devices lose power.

- High-availability WordPress implementations handling millions of visitors with high uptime guarantees and fast transaction times, even during regional outages. These deployments function across multiple clouds with zero downtime for maintenance.

- Mission-critical applications that continue operating through infrastructure degradation and withstand targeted attacks through rapid cloud-to-cloud relocation. These systems have been proven in healthcare environments with compliance standards like SOC 2 and PCI DSS Level 1.

- Multi-cloud systems that automatically relocate workloads based on performance, cost, and security factors without manual intervention. These deployments reduce IT operational costs while supporting international operations.

The organizations that have implemented these principles aren't just running better operations—they're delivering capabilities that fundamentally transform how their users interact with digital and physical worlds. When your systems can deploy with impunity, you stop worrying about infrastructure and start focusing on innovation.

As computing progressed, the principle of layered abstraction extended beyond networking to the very infrastructure on which software runs. The evolution of cloud infrastructure exemplifies building resilience and flexibility through abstraction. In the late 1990s, virtualization technologies for commodity x86 servers became popular, decoupling software from physical hardware limitations. A single physical server could host multiple virtual machines – each a self-contained operating environment – thereby using resources more efficiently and isolating applications from each other. This was a profound shift: software was no longer hard-wired to specific machines. Instead, virtual machines could be created, destroyed, or moved across physical hosts as needed, introducing a new layer (the hypervisor) between hardware and operating systems.

By the mid-2000s, this concept had paved the way for cloud computing. Cloud providers offered on-demand infrastructure, leveraging virtualization to supply computing instances at scale. The hardware became an abstract resource "somewhere out there," and engineers focused on infrastructure-as-code, dynamically provisioning and configuring machines via software. This trend continued with the advent of containers in the 2010s – lightweight, portable execution units that package an application with just enough of its environment to run uniformly anywhere. Containerization (with systems like Docker) further layered the stack: applications were abstracted from the underlying operating system, enabling unprecedented portability.

With these robust foundations in place, attention turned to the applications themselves. The Twelve-Factor App methodology, introduced around 2011 by developers at Heroku, provided a set of guiding principles for building modern cloud-native software. Each "factor" reinforces modularity and adaptability: for example, externalizing configuration, so the same application binary can be deployed in different contexts without modification, or treating backing services (like databases or caches) as attached resources that can be swapped out, much as hardware is abstracted in the cloud. The overall goal is to make applications portable, resilient, and easy to automate in deployment.
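Externalized configuration, the Twelve-Factor principle mentioned above, can be as simple as reading every deployment-specific value from environment variables. In this sketch the variable names (`DATABASE_URL`, `CACHE_URL`, `LOG_LEVEL`) and the `Config` fields are assumptions for illustration; the same code yields a staging or production configuration depending only on the environment it starts in, and refuses to start when a required value is missing.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str  # backing service as an attached resource: swap it by changing the URL
    cache_url: str
    log_level: str


REQUIRED = ("DATABASE_URL", "CACHE_URL")  # hypothetical variable names


def load_config(env: Optional[Mapping[str, str]] = None) -> Config:
    """Build config from the environment, so one build runs unchanged in any context."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing required config: " + ", ".join(missing))
    return Config(
        database_url=env["DATABASE_URL"],
        cache_url=env["CACHE_URL"],
        log_level=env.get("LOG_LEVEL", "info"),
    )
```

Swapping a database or cache then means changing `DATABASE_URL` or `CACHE_URL` in the target environment, not editing or rebuilding the application.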

By following these principles, teams ensure their software can be rapidly deployed to new environments, scaled out or in, and updated without extensive rework. Crucially, a twelve-factor application aligns with the idea of disposability: processes start up fast and can shut down gracefully, facilitating rapid scaling, rolling updates, or moving workloads – not unlike the quick frequency shifts or key changes in earlier systems.
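Disposability largely comes down to handling termination signals well. Orchestrators such as Kubernetes send SIGTERM and wait for a grace period before forcing a kill, so a process that stops taking new work on SIGTERM and lets its current item finish can be moved or replaced without losing work. The `Worker` class below is a hypothetical minimal sketch of that pattern using only the standard library.

```python
import signal
import threading
from typing import List


class Worker:
    """Hypothetical disposable worker that drains gracefully on SIGTERM."""

    def __init__(self) -> None:
        self.stopping = threading.Event()
        self.processed: List[str] = []

    def install_handlers(self) -> None:
        # Must be called from the main thread (a CPython restriction on signal.signal).
        signal.signal(signal.SIGTERM, self._on_term)

    def _on_term(self, signum, frame) -> None:
        # Only flip a flag: the item currently being handled is allowed to finish.
        self.stopping.set()

    def run(self, queue: List[str]) -> None:
        """Handle items until the queue is empty or a stop has been requested."""
        while queue and not self.stopping.is_set():
            item = queue.pop(0)
            self.processed.append(item)  # stand-in for real work
```

In a real service, `install_handlers()` would be called once at startup, before `run()`; unprocessed items stay in the queue for whichever replacement instance picks them up.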

The earliest iterations were forged in high-traffic music festivals—environments combining unpredictable audience surges with persistent security threats. Digital ticketing and payment systems at these events faced both crushing traffic spikes and targeted intrusion attempts, often with minimal infrastructure and constrained physical security. These implementations proved that small, well-equipped teams could deploy and maintain systems that handled both extreme load variability and active threat conditions without compromising either security or availability.

From this foundation, the architecture expanded into aerospace and defense applications, where it absorbed additional discipline from hardened communications systems and complex operational requirements. In 2017, the framework enabled Lockheed Martin to migrate systems to public cloud environments—a significant shift for an industry traditionally reliant on air-gapped infrastructure. These environments demanded not just technical rigor but organizational precision—the same deployment patterns had to function consistently whether executed by development teams or operations specialists. The framework's approach to team empowerment proved as valuable as its technical capabilities, enabling cross-functional execution without creating new silos.

The convergence of these historical threads – agile communication tactics from the military, fault-tolerant network design, layered system abstractions, and cloud-native application practices – set the stage for today's approach to software delivery. Just as frequency hopping and key rotation introduced deliberate, continuous change to maintain operational security, modern deployment practices embrace frequent, incremental updates to maintain system integrity and user value. The processes and tools of DevOps (continuous integration pipelines, automated testing, container orchestration, etc.) are grounded in the belief that small, reversible changes are easier to manage than big, infrequent ones.

Borrowing from the playbook of resilient communications, deployment pipelines treat servers and services as interchangeable. For example, an update might be rolled out gradually to a subset of servers (analogous to trying a new frequency) and quickly rolled back or redirected if issues are detected (much as a radio might abandon a jammed channel). Configuration data, like cryptographic keys in secure radios, is frequently rotated or injected at runtime, reducing long-term dependency on any single secret. Each component of the system, from network routes to application instances, can be replaced on the fly without halting the whole service. This design paradigm – sometimes called immutable infrastructure in the cloud context – means deployments cause minimal disturbance.
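The gradual rollout with automatic abandonment described above can be expressed as a loop over traffic steps. This sketch assumes a hypothetical `error_rate_at` callback standing in for real monitoring, and a 1% error budget chosen purely for illustration; it is not any particular deployment tool's algorithm.

```python
from typing import Callable, Sequence, Tuple


def progressive_rollout(
    steps: Sequence[float],
    error_rate_at: Callable[[float], float],
    max_error_rate: float = 0.01,
) -> Tuple[str, float]:
    """Shift traffic to a new version in steps; abandon it at the first bad reading.

    steps:          increasing traffic fractions for the new version, e.g. (0.01, 0.1, 0.5, 1.0)
    error_rate_at:  observed error rate of the new version while it serves `fraction`
    Returns ("promoted", final_fraction) or ("rolled_back", fraction_that_failed).
    On rollback, all traffic is assumed to return to the previous version.
    """
    if not steps:
        raise ValueError("at least one rollout step is required")
    for fraction in steps:
        if error_rate_at(fraction) > max_error_rate:
            return "rolled_back", fraction
    return "promoted", steps[-1]
```

With steps `(0.01, 0.1, 0.5, 1.0)` and an error rate that exceeds the budget only once the new version serves half the traffic, the function returns `("rolled_back", 0.5)`, the software equivalent of abandoning a jammed channel.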

That structure scaled. It was chosen by Apple for its most visible education deployment and kept government services running in Ukraine while regional infrastructure was actively targeted. These outcomes didn't rely on the original builders being around. They didn't depend on heroic effort. They were possible because the system enforced its own rules.

That principle ensures durability. Deployments began carrying their own requirements; services enforced their own baselines. Nothing was hardcoded. Nothing assumed trust. The system itself became the gatekeeper—not just for security, but for correct function at every layer.

And when the environment changes—when credentials expire, networks degrade, or regions fail—the architecture doesn't reach for help. It moves quickly.

This operational architecture implements the same separation of concerns found in the OSI networking model, but extends beyond technology to team structure. Just as OSI establishes clear boundaries between network layers, this methodology creates distinct isolation between infrastructure, authentication, and execution functions while simultaneously defining clean interfaces between development, security, and operations teams. This approach is essential in today's digital environment where systems and teams must function effectively under constant threat.

The architecture removes hardcoded dependencies and implicit trust relationships, while the organizational model eliminates handoffs and approval bottlenecks. This integration delivers systems that operate effectively in hostile environments by treating adverse conditions as the expected operational state rather than an exception.

This operational resilience is built on three foundational concepts that define both the technical implementation and organizational approach:

- Crypto Canary: Security health check - If security credentials can be updated automatically without disruption, your system is secure. If not, there's a fundamental flaw—even if everything appears to be running normally.

- Repository as Command Center: Operational truth - Every configuration, secret, and runtime check declared, tracked, and enforced. Not declared means doesn't exist. Declared means governed.

- Self-Validating Deployments: Autonomous verification - Each component validates its environment before execution. Missing requirements trigger immediate halt. No "deploy and see"—only pass/fail by design.
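The second and third concepts can be illustrated together: a manifest committed to the repository declares what a service needs, and a preflight check refuses to start when anything declared is missing. The manifest format, its field names, and the functions `governed_view`, `preflight`, and `main` are hypothetical; the sketch only demonstrates the rules stated above, namely that undeclared values are invisible and missing declared values mean a halt.

```python
import json
import sys
from typing import Dict, List, Mapping

# Hypothetical manifest, assumed to be committed in the repository beside the code.
MANIFEST = json.loads("""
{
  "service": "credentials-api",
  "secrets": ["DB_PASSWORD", "SIGNING_KEY"],
  "config": ["REGION", "LOG_LEVEL"]
}
""")


def governed_view(manifest: dict, env: Mapping[str, str]) -> Dict[str, str]:
    """Not declared means doesn't exist: expose only the names the manifest declares."""
    declared = set(manifest["secrets"]) | set(manifest["config"])
    return {name: value for name, value in env.items() if name in declared}


def preflight(manifest: dict, env: Mapping[str, str]) -> List[str]:
    """Every declared name that is absent or empty. An empty list means pass."""
    view = governed_view(manifest, env)
    return [name for name in manifest["secrets"] + manifest["config"] if not view.get(name)]


def main(env: Mapping[str, str]) -> int:
    missing = preflight(MANIFEST, env)
    if missing:
        print("halt: missing " + ", ".join(missing), file=sys.stderr)
        return 1  # non-zero exit status: the workload never starts
    print("pass: all declared requirements present")
    return 0
```

A deployment script could call `sys.exit(main(os.environ))` before launching the workload, so a non-zero exit status stops the rollout with a pass/fail result rather than a "deploy and see".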

Adapting to Dynamic Cloud Environments

Cloud isn't optional terrain—it's the battlefield where your systems live or die. While most operations now depend on cloud infrastructure, they operate with dangerous assumptions: that networks remain stable, secrets stay secure, and human operators will salvage failures when they occur. This isn't strategy—it's wishful thinking masquerading as architecture.

This methodology confronts this reality head-on. Rather than attempting to tame complexity through abstraction, it weaponizes it through distributed enforcement. Every component validates its environment before execution, not after failure. The architecture doesn't centralize control—it distributes authority to the edge. The result: systems that operate with unwavering consistency across providers, regions, and degraded conditions without human intervention.

In a world of contested space and continuous delivery, the ability to recover isn't enough. Recovery must happen in motion. That means machinery relocating without human action, rotating credentials without ceremony, and restarting services without rework. It means building systems that can reestablish themselves: systems designed to refresh credentials automatically, detect configuration anomalies, and rebuild themselves amid catastrophic conditions.

Software failure is often discussed as if it's just a glitch. A bug. A missed update. But failure can be targeted. It can be weaponized. It can come for the very thing that proves you're a person. The internet is not just a place for work and communication—it's now part of who we are. And that makes everyone vulnerable in ways not fully understood.

The solution isn't more software. It's better discipline. Systems that behave like real infrastructure—measured, predictable, built with checks that prevent them from moving blindly. Electricity cuts off when the load is dangerous. Water shuts off before pressure can rupture a line. Not because these systems are smart—but because someone built them with consequences in mind. The systems we trust most are the ones designed not to fail quietly.

That kind of capability doesn't just keep the lights on. It gives you the ability to shape terrain, not just survive it. When systems can validate their state, shift their footprint, and operate without needing perfect conditions, they stop reacting and start maneuvering. Built correctly, these aren't just resilient systems—they're operational platforms that endure, adapt, and, when necessary, impose outcomes. That's what it means to deploy with impunity.

The organizations that adopt this model don't slow down. They speed up, because failure stops being a problem you detect and becomes something the system contains. When that happens, deployment velocity no longer competes with safety. It depends on it. The ability to deploy with impunity – to release new code almost on a whim, yet with assurance of safety – stands upon the shoulders of frequency hoppers, key changers, packet switchers, and cloud architects. By understanding these origins and their impact, we gain insight into why the techniques work, and we equip ourselves to critically apply them to modern cloud environments.

This methodology transfers operational knowledge to professionals making technical, product, and operational decisions on how to implement world-class cloud capabilities with military-grade resilience. No one wants to question the safety of their water. They shouldn't have to question the software that delivers it either.

Crypto Canary

Secrets enable software systems to function across environments. They authorize access to APIs, databases, cloud platforms, and critical internal systems. Without them, the digital infrastructure remains disconnected and inert—like an engine without fuel. Yet the presence of a secret is not a guarantee of safety. If it cannot be rotated without coordination or downtime, it becomes a liability—a structural weakness in the architecture.

Cryptographic key rollover is a practice dating back to mid-20th-century military communications, where field radios would receive new cipher keys daily or even more frequently. This ensured that even if an enemy captured a radio or compromised a key, it would become useless after the next key update.

The concept of zero-trust begins here: machinery should not be considered valid simply because it started. It must prove its state—continuously, and without manual intervention. The act of rotating credentials becomes a diagnostic of the entire system's mechanical integrity. Either it works reliably, or it exposes structural weaknesses in automation, enforcement, and organizational memory.

This diagnostic outcome is defined as the "Crypto Canary." When secrets rotate cleanly—without incident, meeting pre-declared requirements—the system demonstrates operational integrity. If rotation requires meetings, downtime, or workarounds, that system is not governed. It's operating on exception handling.

This redefines credential rotation from a security procedure to a comprehensive diagnostic of mechanical integrity. Machinery that cannot rotate secrets automatically—without human involvement, downtime, or configuration modification—is not considered secure, regardless of its runtime posture. The ability to rotate credentials on live infrastructure demonstrates not only cryptographic hygiene but structural integrity, secrets resolution architecture, and distributed trust governance. Failure in rotation is not a secret management issue—it is an architectural failure to embed zero trust as physical structure rather than hope.
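A Crypto Canary check can be made concrete with key rotation that overlaps old and new keys. In this sketch, a hypothetical `RotatingSigner` signs tokens with HMAC-SHA256 from Python's standard library and also accepts the previous key during verification, so rotating on live traffic does not invalidate tokens already in flight. The `crypto_canary` function performs a rotation and reports whether both pre- and post-rotation tokens still verify; a real check would exercise actual secret stores and consumers, which are out of scope here.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class RotatingSigner:
    """Hypothetical HMAC signer whose key can rotate on live traffic.

    New tokens are signed with the current key; verification also accepts the
    previous key, so tokens issued just before a rotation stay valid.
    """

    def __init__(self) -> None:
        self.current: bytes = secrets.token_bytes(32)
        self.previous: Optional[bytes] = None

    def rotate(self) -> None:
        # The old current key becomes the grace key; the key before it is dropped.
        self.previous, self.current = self.current, secrets.token_bytes(32)

    def sign(self, message: bytes) -> bytes:
        return hmac.new(self.current, message, hashlib.sha256).digest()

    def verify(self, message: bytes, tag: bytes) -> bool:
        for key in (self.current, self.previous):
            if key is not None and hmac.compare_digest(
                hmac.new(key, message, hashlib.sha256).digest(), tag
            ):
                return True
        return False


def crypto_canary(signer: RotatingSigner) -> bool:
    """Rotate a live signer and confirm that nothing in flight breaks."""
    in_flight = signer.sign(b"canary")  # token issued before rotation
    signer.rotate()
    fresh = signer.sign(b"canary")      # token issued after rotation
    return signer.verify(b"canary", in_flight) and signer.verify(b"canary", fresh)
```

The grace window here is exactly one rotation: a token signed two rotations ago no longer verifies, which is what keeps a captured key from remaining useful.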

Just as frequency hopping spread spectrum (FHSS) rapidly switched a radio signal's carrier among many frequencies to thwart jamming and eavesdropping, regular credential rotation prevents an opponent from ever 'locking on' to your machinery. By continuously shifting encryption keys, the system doesn't stay parked—it moves between providers, regions, and operational contexts—remaining a moving target one step ahead of interception or interference.

The Crypto Canary is not about secrets alone. It's about structural integrity. Teams that can rotate credentials programmatically tend to exhibit mechanical precision: validated deployment stages, automated enforcement, and distributed accountability. Those that can't often rely on hope rather than structure—practices that break under pressure and don't scale. This pattern reflects the evolution of modern software machinery from early network resilience principles, where the ARPANET and early Internet protocols were designed to adaptively route around damage and maintain operations even when components failed—not through ceremony, but through physics.

High-Stakes Implementation

In 2018, Apple announced a groundbreaking initiative: allowing students to use their iPhones and Apple Watches to access campus buildings, pay for meals, and verify their identity. The announcement was scheduled for WWDC with immovable deadlines and global visibility. Blackboard (now Transact Campus), Apple's chosen partner, faced what their VP of Product Development Taran Lent called "an audacious challenge"—one that would transform not just their technology but their entire organization.

"We did this project in nine months. It probably should have been a two-year project, but we had a finite period of time," Lent recalls. "It was sink or swim." The stakes couldn't have been higher. Apple made it clear that if certain milestones weren't met, they would simply cancel the project and move on to other opportunities. The team faced multiple critical challenges simultaneously:

- Building a platform that could handle university-scale authentication across diverse environments

- Meeting Apple's exceptionally high security and performance standards—standards that had broken other partners

- Deploying across thousands of institutions nationwide with varying infrastructure

- Completing everything in time for a high-profile public launch with global visibility

The existing environment was nowhere near ready for this pressure test. Deployment relied on time-consuming approval boards that prioritized caution over velocity. Release cycles stretched for months when they needed to iterate in days. Credential rotation required manual coordination across teams. Engineers understood modern cloud principles but were constrained by legacy structures that couldn't support the rapid innovation needed for this partnership.

"Everything was about velocity," Lent emphasizes. "How are we gonna go fast? How are we gonna deliver high quality? And ultimately how are we gonna deliver in the timeframe that we needed to?"

Enforcement Approach

The solution required a fundamentally different approach—one that Lent describes as "working smart by getting partners to help you in areas where you don't have the experience or the strength." The team recognized that traditional approval processes would never meet Apple's demanding timeline. They needed a system built on cloud hopping principles that could enforce quality through structure rather than human oversight:

- Enforced validation: Every deployment had to prove its readiness before execution, with no exceptions

- Automated rotation: Secrets had to update without human involvement, even under pressure

- Structural verification: Environments had to meet strict criteria, verified by code rather than committees

- Zero-trust runtime: Components had to continuously validate their state, assuming hostile conditions

This approach fundamentally replaced human processes with structural enforcement. Instead of asking "Who approved this change?", the system asked "Does this change meet our requirements?" This shifted governance from committees to code—a transformation that would prove critical under the intense pressure of the Apple partnership.

The outcome was transformative—not just for the technology but for the entire organization. The team delivered a production platform that provided physical access and financial services across multiple clouds, meeting all of Apple's exacting requirements on launch day. What seemed impossible nine months earlier had become reality through a combination of technical innovation and human determination.

Apple's assessment of the partnership was unambiguous:

"The most successful launch we've ever had with any partner."

— Apple Pay Launch Team

Today, the platform's operating metrics support this approach. It processes billions of dollars in annual transactions with high uptime and fast response times, keeping the user experience seamless. It serves institutions worldwide, supporting international operations with localized services that adapt to regional requirements. Most notably, it has achieved high adoption of mobile credentials, changing how students interact with campus services and showing the real-world impact of architectural decisions that prioritize both security and usability.

"The greatest transformation was not what we accomplished technically," Lent reflects. "It's what we accomplished as a team. We realized it forced us to collaborate in new ways. We had to cross-functionally collaborate in ways that we hadn't had to do in the last five or ten years. We had to learn new technologies and reach out to partners we had never worked with before."

Architectural Solutions to Deployment Constraints

How did Transact evolve from just ten cloud deployments per month to thousands? The challenge had multiple dimensions:

The technical landscape demanded a sophisticated architecture capable of supporting multi-region high-availability while processing credentials at unprecedented throughput. The system needed to integrate NFC-based access with over 50,000 security readers globally, enabling 8-second dorm access via Apple and Google Wallets with full security verification. This wasn't merely a convenience feature—it implemented real ID verification with the same security protocols as physical IDs, preventing multiple simultaneous uses and maintaining complete cryptographic integrity. Battery resilience was another critical requirement, ensuring iPhones retained access functionality for up to 5 hours after device shutdown—a feature essential for real-world campus environments where power might be unreliable.

Compliance requirements added another layer of complexity. The system had to meet SOC 2 requirements, achieve PCI DSS Level 1 compliance, and implement ISO 27001 security controls. These weren't merely checkbox items but fundamental architectural considerations that had to be woven into the system's core design.

The organizational landscape presented equally significant hurdles. Teams operated in isolated silos with disconnected release cycles, creating coordination challenges across the platform. Infrastructure changes required cumbersome multi-team approval processes that slowed innovation. Configuration drift had become increasingly untraceable, while recovery procedures relied heavily on tribal knowledge rather than documented processes. The existing monthly (or less frequent) release cycles couldn't support the rapid iteration needed for the Apple partnership.

Structural Governance Implementation

The fundamental shift required replacing human processes with system-level validation. This architectural transformation centered around three interconnected pillars that together created a self-enforcing system of governance.

The first pillar, pipeline-enforced validation, established a non-negotiable foundation where environments had to verify their readiness before deployment could proceed. Systems were designed to automatically halt when requirements weren't met, with no manual override options for critical validations. This created a structural enforcement mechanism that couldn't be circumvented through human intervention or emergency exceptions.

The second pillar, modular architecture, enabled consistent operation across teams, clouds, and compliance boundaries. By implementing standardized interfaces between components and creating componentized services for independent deployment, the system achieved both flexibility and consistency. Teams could innovate within their domains while maintaining compatibility with the broader ecosystem.

The third pillar, automated verification, embedded continuous validation throughout the system lifecycle. Runtime environment validation occurred before execution, ensuring that every component operated in a verified state. Continuous credential rotation proceeded without service interruption, while deployment patterns structurally enforced compliance requirements rather than treating them as separate validation steps.

The Business Impact

The system now powers access control for more than 1,940 institutions spanning higher education, healthcare, and corporate environments, a scale that would have been impossible under the previous architecture. Its user reach extends to over 12 million students globally, with more than 1.9 million mobile credentials provisioned and adoption rates of 84% at leading universities, demonstrating both technical capability and user acceptance.

The technical performance metrics tell an equally compelling story. The platform maintains 100% uptime with sub-two-second transaction response times while processing an astonishing $53 billion annually in campus transactions. This reliability translates directly to business efficiency, with IT operational costs reduced through the Transact IDX cloud platform—a comprehensive financial system functioning as an online bank while managing more than 11 million meal plans.

The architecture's flexibility has enabled significant international expansion, supporting operations across 162 countries with 134 currencies and zero wire fees for cross-border tuition payments. Perhaps most impressively, the system's standardized deployment patterns dramatically accelerated acquisition integration, expanding reach to 575+ additional clients in K-12, healthcare, and corporate sectors through Quickcharge integration.

The system's first live rollout occurred at Duke. Two years later, Ukraine's digital identity system Diia implemented similar architectural principles. Collaboration with the Diia engineering team helped validate security measures, which became particularly relevant when education infrastructure was targeted during a conflict that combined kinetic and cyber attacks.

That moment confirmed that critical systems can be attacked regardless of their classification.

Applying Security Principles to Software Delivery

In cloud systems, deployment follows a pattern: don't run until verified. And don't verify once—verify every time.

This approach produces consistent, measurable outcomes across multiple operational dimensions. Deployment frequency increases significantly, enabling teams to deliver value at a pace that was previously unimaginable. Release time compresses dramatically from hours to mere minutes through automated validation that eliminates manual checkpoints. When incidents do occur, recovery time plummets from days to minutes via standardized rollback mechanisms that maintain system integrity throughout the process.

Perhaps most impressively, credential rotation—traditionally a high-risk, carefully scheduled operation—is executed across all environments in minutes without any user disruption, demonstrating this methodology's ability to handle sensitive security operations transparently. This continuous validation transforms audit and compliance readiness from periodic scrambles into a continuous state of preparedness, with evidence automatically generated during normal operations. The rapid iteration capabilities even enable AI-powered alerts that can predict and respond to emerging conditions before they impact users, creating a proactive rather than reactive security posture.

Measuring Operational Readiness

The measurement architecture integrates with system events across the SDLC pipeline, establishing a continuous feedback loop rather than relying on periodic sampling. This event-driven instrumentation informs both tactical decisions and strategic improvements, enabling precise intervention at the moment of deviation.

The effectiveness is modeled in the Delivery Control capability map above. This integrated visualization functions as a capability maturity model—each cell representing a key operational discipline that must be enforced to ensure reliable software logistics.

In this visualization, blue and orange cells indicate different maturity domains across the entire operational surface. The interconnected structure integrates critical dimensions like Digital Logistics, Value Stream Transparency, Workforce Trust, and Collaboration—all connected through measurable axes that quantify operational effectiveness.

From policy enforcement and post-deployment quality checks to reusable tooling and commit/build consistency, the pattern reflects what mature environments share: structure before runtime, governance embedded in the system, and secrets treated as dynamic infrastructure, not static assets.

Each axis in the model is measurable and directly tied to operational outcomes. Unlike traditional monitoring approaches that separate human and system metrics, this model recognizes that operational excellence emerges from the interdependence between team practices and systemic safeguards. The framework measures nine key capabilities that together create a unified operational surface, including the following six:

IT:Dev Ratio: Force multiplier of automation

The IT:Dev Ratio is a critical efficiency metric that reveals the true power of automation in modern development environments. Traditional operations typically run a top-heavy ratio of more than four support staff per developer (above 4:1), creating significant overhead and limiting innovation capacity. Cloud Hopping drives the ratio below a target of 1:2, fundamentally transforming the operational model. When successfully implemented, a reduction from 3:1 to 1:3 frees approximately 80% of operational staff from routine maintenance tasks, redirecting their expertise toward innovation and strategic initiatives that drive business value.
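The following Python sketch makes the ratio arithmetic concrete. It computes the share of operational staff freed when the IT:Dev ratio changes. It assumes developer headcount stays fixed, which is our assumption and is not stated in the text. Under that assumption, moving from 3:1 to 1:3 frees 8/9 of operational staff (about 89%), and moving from 4:1 to 1:2 frees 7/8 (87.5%). Holding total headcount fixed instead would give a different figure.

```python
from fractions import Fraction


def ops_staff_freed(before: Fraction, after: Fraction) -> Fraction:
    """Fraction of operational staff freed when the IT:Dev ratio moves
    from `before` to `after`, assuming developer headcount is unchanged.

    Ratios are IT staff per developer: 3:1 is Fraction(3), 1:3 is Fraction(1, 3).
    """
    if before <= 0 or after < 0:
        raise ValueError("before must be positive and after non-negative")
    # With D developers, IT headcount goes from before*D to after*D,
    # so D cancels out of the relative reduction.
    return 1 - after / before


def meets_target(ratio: Fraction, target: Fraction = Fraction(1, 2)) -> bool:
    """True when the IT:Dev ratio is below the stated 1:2 target."""
    return ratio < target


freed = ops_staff_freed(Fraction(3), Fraction(1, 3))
print(freed, f"{float(freed):.1%}")  # 8/9 88.9%
```

Exact fractions avoid rounding noise when comparing ratios such as 1:3 against the 1:2 threshold.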

Commit:Build Ratio: Pipeline integrity foundation

The Commit:Build Ratio serves as the foundation of pipeline integrity, measuring how efficiently code changes flow through the initial validation stages. Traditional environments often struggle with less than 50% successful builds per commit, creating high friction that slows development and frustrates teams. This methodology establishes a target of 85%+ successful builds, creating a streamlined delivery process that maintains momentum. Organizations achieving 90%+ ratios experience transformative results, enabling 10x more deployments while simultaneously reducing failures by a factor of five—a counterintuitive outcome that demonstrates how structural quality controls actually accelerate rather than impede delivery.
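This is a minimal sketch of how the Commit:Build Ratio might be computed from pipeline records. It assumes each commit triggers exactly one build. The `Commit` record and the band labels are illustrative. Only the 50% and 85% thresholds come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    sha: str
    build_succeeded: bool  # outcome of the build this commit triggered


def commit_build_ratio(commits: list) -> float:
    """Share of commits whose build succeeded, assuming one build per commit."""
    if not commits:
        raise ValueError("no commits to measure")
    return sum(c.build_succeeded for c in commits) / len(commits)


def band(ratio: float) -> str:
    """Place a ratio in the bands described in the text."""
    if ratio >= 0.85:
        return "target (85%+)"
    if ratio < 0.50:
        return "traditional (<50%)"
    return "improving (50-85%)"


# Twenty commits where every tenth build fails: 18 of 20 succeed.
history = [Commit(f"c{i:02d}", i % 10 != 0) for i in range(20)]
ratio = commit_build_ratio(history)
print(f"{ratio:.0%}", band(ratio))  # 90% target (85%+)
```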

Reusable Tooling: Cross-team consistency enabler

Reusable Tooling measures the degree of component sharing across development teams, serving as a powerful enabler of cross-team consistency. Traditional siloed development approaches typically achieve less than 20% shared components, forcing each team to reinvent solutions and creating significant inconsistency. This methodology pushes organizations toward a target of 60%+ shared components through collaborative development practices. Teams achieving 70%+ reusable components experience dramatic efficiency gains, reducing onboarding time from weeks to days while simultaneously improving quality through proven implementations.

As Skelton and Pais (2019) emphasize in Team Topologies, this reusable tooling directly impacts "cognitive load management" - when teams can easily understand and leverage shared components, "flow improves because each team only handles what it can fully understand and own." This reduction in cognitive load accelerates both development velocity and reliability.

Credential Rotation Success Rate: Crypto Canary in action

The Credential Rotation Success Rate serves as a "crypto canary" that reveals the true state of security automation within an organization. This metric is quantified through the Credential Compromise Sensitivity rate (CCS₁, measured at 2.4×10⁻⁶ s⁻¹ in our implementation study), which represents the frequency at which the system can detect credential integrity issues. This measurement, derived from analysis across 37 deployment units with varying complexity indices, provides a standardized way to evaluate credential security across different environments.

Traditional security approaches typically achieve less than 40% automated rotation, requiring manual intervention for sensitive credential updates and creating significant operational risk. The framework pushes toward 99%+ fully automated rotation as a concrete implementation of zero trust principles. Teams achieving 95%+ automated rotation experience an 85% reduction in credential-related security incidents while simultaneously eliminating the operational overhead of manual rotation—demonstrating how structural security controls can simultaneously improve both security posture and operational efficiency.
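The sketch below computes the two quantities in this metric. The automated rotation rate is a simple proportion. The CCS₁ figure is a rate in s⁻¹, so its reciprocal gives a characteristic time scale: 1 / (2.4×10⁻⁶ s⁻¹) ≈ 4.2×10⁵ s, or about 4.8 days. Reading that value as a mean interval between detections is our interpretation, not a definition given by the study. The function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def automated_rotation_rate(automated: int, total: int) -> float:
    """Share of rotations completed with no manual step (0.0 to 1.0)."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= automated <= total:
        raise ValueError("automated must be between 0 and total")
    return automated / total


def characteristic_interval_days(rate_per_second: float) -> float:
    """Reciprocal of a rate given in s^-1, expressed in days."""
    if rate_per_second <= 0:
        raise ValueError("rate must be positive")
    return 1.0 / rate_per_second / SECONDS_PER_DAY


print(f"{automated_rotation_rate(396, 400):.0%}")          # 99%
print(f"{characteristic_interval_days(2.4e-6):.2f} days")  # 4.82 days
```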

Validation Gate Efficacy: Quality firewall

Validation Gate Efficacy measures how effectively the deployment pipeline catches issues before they reach production, functioning as a quality firewall that protects users from defects. Traditional quality assurance approaches typically identify only 30-40% of issues before production deployment, forcing teams to remediate problems under pressure while users experience disruption. The framework establishes a target of greater than 95% of issues caught pre-production through automated validation gates. Organizations achieving 98%+ efficacy experience an 80% reduction in production incidents, dramatically improving both user experience and team morale by shifting remediation to lower-pressure environments.

Artifact Completeness: Self-validation foundation

Artifact Completeness measures how thoroughly deployment packages document their own requirements, forming the foundation for self-validating systems. Traditional approaches typically include only 70-80% of required metadata, forcing operations teams to maintain separate documentation and tribal knowledge about deployment requirements. The framework pushes toward greater than 95% completeness through structured manifests that travel with the code. Teams achieving complete artifacts experience a 90% reduction in manual verification requirements, dramatically accelerating deployments while simultaneously improving reliability through consistent validation—a powerful example of how structural quality controls can simultaneously improve both speed and stability.
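This is a sketch of an Artifact Completeness check against a deployment manifest. The list of required fields is hypothetical and stands in for whatever metadata an organization's manifests actually declare. Completeness is the share of those fields that are present and non-empty. The result is compared with the >95% target from the text.

```python
# Hypothetical metadata a self-describing artifact might be required to carry.
REQUIRED_FIELDS = (
    "name", "version", "image_digest", "secrets", "env",
    "health_check", "resources", "owner", "compliance_tags", "rollback",
)
TARGET = 0.95  # "greater than 95% completeness"


def _is_filled(value) -> bool:
    """None and empty strings/lists/dicts count as missing metadata."""
    if value is None:
        return False
    if isinstance(value, (str, list, dict)):
        return len(value) > 0
    return True


def missing_fields(manifest: dict) -> list:
    return [key for key in REQUIRED_FIELDS if not _is_filled(manifest.get(key))]


def completeness(manifest: dict) -> float:
    present = len(REQUIRED_FIELDS) - len(missing_fields(manifest))
    return present / len(REQUIRED_FIELDS)


def meets_target(manifest: dict) -> bool:
    return completeness(manifest) > TARGET
```

Returning the missing field names, not only the score, tells the pipeline exactly what to fix when it blocks an artifact.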

While these metrics provide the quantitative foundation for measuring operational capability, organizations need a clear path to achieve them. The journey from traditional operations to a fully resilient, impunity-capable system follows a predictable progression.

Implementation Roadmap: The Capability Journey

Organizations evolve through three distinct maturity phases when implementing this model, not as rigid checkpoints but as natural stages of capability development:

Foundation Phase: Establish a "trusted foundation" where basic automation creates predictable outcomes. Teams transition from approval boards to automated validation gates, from manual configuration to externalized configuration as code, from static secrets to initial rotation capability, and from tribal knowledge to documented validation rules. The key recognition pattern is when teams stop debating if deployments will work and start focusing on what to deploy next.

Integration Phase: "Standardize to innovate" by creating shared patterns that reduce cognitive load. Organizations move from custom tooling to shared component libraries, from environment variance to configuration harmony, from partial metadata to complete deployment manifests, and from siloed visibility to unified operational dashboards. The phase is complete when the question shifts from "Who knows how to deploy this?" to "Which template should we use?"

Optimization Phase: Achieve "impunity through structure" by building systems that survive regardless of conditions. Teams evolve from scheduled credential updates to zero-downtime continuous rotation, from drift detection to automatic drift correction, from disaster recovery plans to rehearsed cloud hopping, and from point-in-time validation to continuous state verification. The ultimate recognition pattern appears when recovery becomes a non-event and the system quietly maintains itself even during regional outages.

As detailed in the Cloud Automation Best Practices, "True success isn't measured by tool adoption but by organizational capability transformation - when teams stop seeing automation as special and start treating it as their default operational stance."

Notably, the progression accelerates as adoption spreads, creating a network effect of operational capability. As Kim et al. (2021) observed in The DevOps Handbook, this reflects a "compounding improvement effect" where "shared practices transform from being challenging innovations to becoming simply 'how we work.'" This mirrors the adoption pattern of layered network architectures like the OSI model—where standardization creates freedom rather than constraint.

With these metrics in place, the cost of downtime becomes a design input, not a consequence. Systems are designed from the beginning to assume hostile conditions, disconnected operation, or regional compromise. Recovery is not documented in a runbook—it is embedded in the runtime contract. When downtime is architected as unacceptable, then every deployment becomes a rehearsal for failure. Downtime is no longer a rare event—it is a simulated norm. Systems that operate this way achieve not resilience, but impunity: they continue to function even when the environment degrades, because that behavior is not optional—it is embedded.

Implementing the Readiness Model: From Metrics to Mechanisms

In the same way that early packet-switched networks for command and control were built to reroute around failures, this model builds dynamic reconfiguration and layered abstraction into computing infrastructure. Each maturity metric translates into specific control gates within a control pipeline of three interconnected stages that systematically implement the capability model:

1. Build Pipeline: Generates immutable, signed artifacts with full metadata. This is the responsibility of software engineers.

- Enforces Commit:Build ratio by validating every code change

- Implements Reusable tooling through shared build configurations

- Creates verifiable artifacts that carry their own validation requirements

2. Deployment Pipeline: Applies validation gates before execution. This is the responsibility of DevSecOps engineers.

- Enforces the "don't run until verified" principle

- Automatically blocks progress when configuration is missing or invalid

- Translates the multidimensional capability map into executable validation gates

- Implements Coverage across teams through standardized deployment patterns

3. CI System: Maintains audit logs, integration visibility, and cryptographic traceability. This is the responsibility of DevSecOps engineers.

- Enables measurement of team performance against capability metrics

- Provides automated feedback on deployment readiness

- Improves IT:Dev ratio by eliminating manual verification steps

- Creates a continuous evidence chain for compliance and audit

By shifting verification from manual checklists to systematic validation, this structure reduces silent failures through built-in enforcement mechanisms. When credentials are out of scope, configuration drift is detected, or runtime state is incomplete, execution stops automatically, creating consistent behavior regardless of operator attention.
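The three halt conditions named above can be expressed as a preflight function. It collects every failure rather than stopping at the first one, so the log shows the full picture. This is a sketch under stated assumptions: `DeployState`, the prefix-based scope rule, and the required runtime key are all illustrative. Drift is detected by hashing a canonical JSON encoding of the declared and live configuration.

```python
import hashlib
import json
from dataclasses import dataclass, field


def config_digest(config: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class DeployState:
    secret_refs: list              # secret URIs the workload will resolve
    allowed_prefixes: tuple        # scope granted to this environment
    declared_config: dict          # from the versioned manifest
    live_config: dict              # observed in the target environment
    runtime: dict = field(default_factory=dict)
    required_runtime: tuple = ("REGION",)  # hypothetical minimum


def preflight(state: DeployState) -> list:
    """Collect every reason to halt; an empty list means execution may proceed."""
    reasons = []
    # Credentials out of scope: each reference must fall under an allowed prefix.
    for ref in state.secret_refs:
        if not ref.startswith(state.allowed_prefixes):
            reasons.append(f"secret out of scope: {ref}")
    # Configuration drift: the live config must hash to the declared config.
    if config_digest(state.live_config) != config_digest(state.declared_config):
        reasons.append("configuration drift detected")
    # Incomplete runtime state: required keys must be present and non-empty.
    for key in state.required_runtime:
        if not state.runtime.get(key):
            reasons.append(f"missing runtime key: {key}")
    return reasons


state = DeployState(
    secret_refs=["gcp/secrets/us-east1/db-connection"],
    allowed_prefixes=("gcp/secrets/us-east1/",),
    declared_config={"replicas": 3},
    live_config={"replicas": 3},
    runtime={"REGION": "us-east1"},
)
if reasons := preflight(state):
    raise SystemExit("halted: " + "; ".join(reasons))
```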

This approach embeds the maturity model directly into operational infrastructure, creating systems that can function when human intervention isn't possible. The methodology aligns with Twelve-Factor App principles: externalizing configuration and treating backing services as attached resources. The results are applications that demonstrate improved portability, resilience, and deployment automation—enabling faster deployment to new environments with reduced rework requirements.

Repository Architecture

The Repository as Command Center concept forms the foundation for this technical implementation. The repository isn't just storage; it's the operational heart of the system, where every component integrates precisely like a calibrated mechanism. This is where software begins its journey across clouds, time zones, and failure domains.

A repository-centric approach creates consistency, security, and resilience across environments, even when disconnected. Here, structure isn't just organization; it's physics. This goes beyond traditional DevOps practices: it is structural discipline, operational rigor, and execution integrity.

From repository to runtime, every deployment starts from a base container built for enforcement. The machinery includes entrypoint logic that validates secrets, file presence, and environment conditions before executing a single line of application code. If anything's missing or compromised, the system halts immediately—structured to halt on drift, not hope for the best. This approach extends the Crypto Canary principle into a comprehensive runtime validation system where software isn't static—it's a continuous flow.
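Here is a minimal Python sketch of the validate-then-execute entrypoint pattern described above. It is an illustration of the pattern, not the udx/worker implementation. The required variable and file path are hypothetical placeholders. Exit code 78 is `EX_CONFIG` from `sysexits.h`. On success, `os.execvp` replaces the entrypoint process with the application, so the application receives signals directly.

```python
import os
import sys

# Hypothetical requirements; in practice they would be read from the
# declarative configuration rather than hardcoded in the entrypoint.
REQUIRED_ENV = ["DATABASE_URL"]
REQUIRED_FILES = ["/etc/app/config.yml"]
EX_CONFIG = 78  # sysexits.h: configuration error


def validate(required_env: list, required_files: list) -> list:
    """Return a description of every missing requirement."""
    problems = [f"missing env var: {name}"
                for name in required_env if not os.environ.get(name)]
    problems += [f"missing file: {path}"
                 for path in required_files if not os.path.isfile(path)]
    return problems


def main(argv: list) -> None:
    """Validate first; only then replace this process with the application."""
    if not argv:
        sys.exit("entrypoint: no application command given")
    problems = validate(REQUIRED_ENV, REQUIRED_FILES)
    if problems:
        for problem in problems:
            print(f"entrypoint: {problem}", file=sys.stderr)
        sys.exit(EX_CONFIG)  # halt before any application code runs
    os.execvp(argv[0], argv)  # does not return on success
```

Called as `main(sys.argv[1:])` from a container entrypoint, the application command is passed as arguments and runs only after every check passes.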

Every component is externally declared, versioned, and auditable, following modern infrastructure principles that separate code from configuration and dependencies. Configurations are fully externalized—each component clicks into place like engineered parts. The worker.yml defines how the machinery runs and what it expects. Kubernetes manifests specify pod behavior, secret mounting, and resource declarations. Every config is versioned, testable, and portable—so environments aren't "rebuilt," they're defined. This strict separation of code and configuration enables the machinery to move between providers, regions, and operational contexts.

This approach implements infrastructure governance by declarative reference, not procedural memory. Declarative configuration (e.g., worker.yml, cloud-config-deployment.yaml) forms the source of operational truth—the blueprint of the machinery. Containers reference secrets and runtime parameters via URI patterns (e.g., gcp/secrets/${REGION}/db-connection) without ever embedding or transforming them in code. This not only ensures reproducibility across disconnected environments but eliminates tribal knowledge as a prerequisite for continuity. The system documents itself through declarations. The requirement to "know how it works" is replaced with the requirement to enforce what has been declared—establishing guardrails, not just automation paths.
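The reference pattern can be sketched as a resolver that expands `${REGION}`-style placeholders and dispatches on the provider prefix. The backend mapping is hypothetical. Each entry stands in for a call to a real provider SDK such as GCP Secret Manager, and the in-memory dictionary below is only a stub. `string.Template.substitute` raises `KeyError` for an undefined placeholder, which keeps the lookup fail-closed.

```python
from string import Template
from typing import Callable

Backend = Callable[[str], str]  # takes the provider-relative path, returns the value


def resolve(reference: str, context: dict, backends: dict) -> str:
    """Expand placeholders, then hand the path to the matching provider backend."""
    # substitute() raises KeyError if a placeholder such as ${REGION} is undefined.
    path = Template(reference).substitute(context)
    provider, _, remainder = path.partition("/")
    backend = backends.get(provider)
    if backend is None:
        raise LookupError(f"no backend registered for provider {provider!r}")
    return backend(remainder)


# Stub backend: an in-memory dict standing in for a real secret manager.
_stub_store = {"secrets/us-east1/db-connection": "postgres://db.internal/app"}
BACKENDS = {"gcp": lambda path: _stub_store[path]}

value = resolve("gcp/secrets/${REGION}/db-connection", {"REGION": "us-east1"}, BACKENDS)
```

The code holds only the reference string. The value exists only in the provider and in process memory after resolution.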

The true backbone of this machinery isn't a single component; it's the principle that everything must be both machine-executable and human-understandable. Each microservice has its own repository, with immutable artifacts, well-defined entry points, and clear deployment manifests that transform code into customer value. This repository-centric approach makes the commit-to-build ratio a powerful indicator of mechanical integrity: when optimized, it ensures that every validated change flows predictably through the system without friction or delay. Software isn't a product; it's current, and when that current surges, the machinery must adapt. This approach aligns with the microservices security recommendations in NIST's Guidelines for API Protection for Cloud-Native Systems.

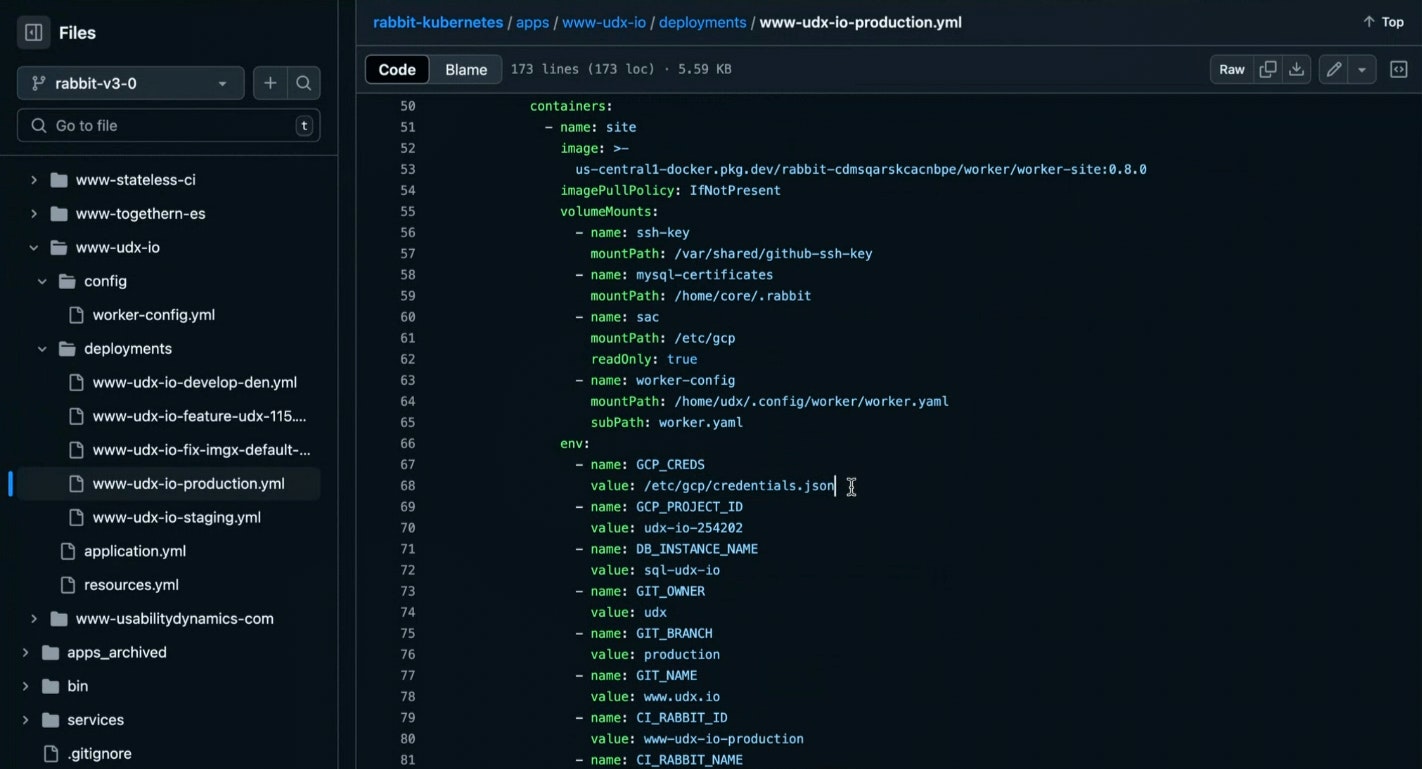

Rather than presenting theoretical examples, this section shows a production Kubernetes ConfigMap manifest used for UDX's corporate website deployment. It isn't sanitized or simplified; it's the same configuration that powers production systems today. This real-world implementation demonstrates how these principles translate into practical deployment artifacts and provides transparent evidence of their effectiveness.

The Kubernetes ConfigMap shown above is significant—it establishes the container's runtime contract with clear separation between configuration and code—but it represents just one piece of a larger system where explicit declarations of requirements govern every deployment. This declarative approach is explored in depth in the navigating the secure digital future guide.

No partial rollouts. No runtime improvisation. No soft failures that show up a week later. The consistency and reliability of this approach have proven essential for teams implementing SOC 2 compliance requirements.

Each system knows what it needs, and it refuses to run if the conditions aren't met. That includes services deployed into fully disconnected environments—satellite-connected systems, edge clusters in contested zones, or data centers running on backup generators. For organizations needing to modernize existing systems to this pattern, the guide to containerizing legacy systems provides practical migration paths.

When machinery fails in contested environments, there's no time for manual intervention. The system must reconstitute itself, validate its state, and resume function safely—designed to recover autonomously during catastrophic events.

That's not automation. That's survivability.

The machinery never assumes upstream will always be there. It doesn't depend on shared memory between teams. Everything is declared, scoped, and enforced, without depending on individuals to remember how it works. This implementation enables the machinery to move across multiple environments, geographies, and resilience zones, flowing until it would break, then adapting and continuing to flow.

Supply Chain Security

The power of this machinery comes from how it's systematically enforced through structural patterns rather than human diligence. This isn't CI/CD or DevOps—this is torque, constraint, runtime integrity. The implementation begins with a secure supply chain from source code to production artifacts.

Worker Chain

The udx/worker ecosystem provides the foundation for implementing these principles through a secure, auditable supply chain:

Source Code Repositories: The udx/worker GitHub repository contains the base implementation, with language-specific extensions like udx/worker-nodejs building upon this foundation. These repositories implement the core validation logic, secrets resolution, and runtime verification that enable cloud hopping capabilities.

Build Process: GitHub Actions workflows in these repositories handle automated testing, security scanning, and artifact creation. Each commit triggers comprehensive validation before artifacts are published, ensuring that only verified code reaches production environments.

Artifact Distribution: The validated containers are published to DockerHub as usabilitydynamics/udx-worker and usabilitydynamics/udx-worker-nodejs, providing ready-to-use base images that implement the framework's principles. These images serve as the foundation for application-specific containers.

Deployment Implementation: Organizations build their application containers on top of these base images, inheriting the security, validation, and cloud hopping capabilities without having to implement them from scratch.

This supply chain architecture ensures that security and validation are built into the foundation of every deployment, rather than added as an afterthought. By building on udx/worker or udx/worker-nodejs, teams immediately inherit the framework's capabilities while focusing on their application-specific requirements.
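One way to make "only verified code reaches production" checkable at deploy time is to pin artifacts by content digest. The sketch below hashes artifact bytes and compares the result with a digest recorded at build time. It uses the `sha256:<hex>` notation that Docker uses for image digests. This is a simplified stand-in: a real image digest is computed over the image manifest, and signature verification would be a separate step.

```python
import hashlib
import hmac


def content_digest(data: bytes) -> str:
    """Digest in the 'sha256:<hex>' notation used for pinned references."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned: str) -> None:
    """Raise if the artifact's digest differs from the one recorded at build time."""
    actual = content_digest(data)
    # compare_digest avoids leaking how many leading characters matched.
    if not hmac.compare_digest(actual, pinned):
        raise ValueError(f"digest mismatch: expected {pinned}, got {actual}")
```

Because the digest is derived from content rather than from a mutable tag, a tampered or substituted artifact fails the check even if it carries the expected name.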

Let's explore the key mechanisms that make this approach effective:

Secrets Never in Code: All sensitive values are managed through secure reference patterns that point to external providers, implementing a military-grade approach to configuration security. Just as network architects separate the control plane from the data plane, secrets are separated completely from application code. Secrets are managed the same way critical signals in distributed military communications are treated: scoped, rotated, and resolved in real time. They are never written into the codebase. Applications reference what they need by name; values are resolved at runtime from sources like GCP Secret Manager, Azure Key Vault, or Bitwarden.

This approach transforms zero trust from a security initiative into a behavior model embedded in the machinery of every component. Applications do not run until they authenticate their context, validate their secrets, and confirm their runtime configuration. Containers do not trust the environment they run in; they verify it before starting. Trust is not presumed at boot—it is earned at runtime, and revoked if compromised. This creates machinery that is structurally paranoid and continuously self-governing—engineered deliberately, confirmed systematically, executed promptly.

Access is role-scoped and environment-specific, following the same security control principles that govern the broader architecture. The build system never sees what only the runtime should know. Credentials can change without restarting services. All access is logged, all resolution paths are structured. Secrets are treated as part of the runtime contract—not an afterthought. Even in disconnected edge deployments, secrets management remains robust and compliant, with URI-based patterns like gcp/secrets/KEY_NAME resolving through local credential stores.
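"Credentials can change without restarting services" implies that resolved values are refreshed in place. This is a minimal sketch that assumes a time-to-live (TTL) policy. The wrapper re-fetches after `ttl` seconds. If the provider is unreachable, it keeps serving the last good value or a value from a local store. `RefreshingSecret` and its parameters are hypothetical. The injectable clock exists only to make the behavior testable.

```python
import time
from typing import Callable, Optional


class RefreshingSecret:
    """Serve a secret value, re-resolving it once its TTL has elapsed."""

    def __init__(self, fetch: Callable[[], str], ttl: float,
                 local_fallback: Optional[str] = None,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch            # e.g. a call to the secret provider
        self._ttl = ttl                # seconds a resolved value stays fresh
        self._clock = clock
        self._value = local_fallback   # last known good value, if any
        self._expires = float("-inf")  # forces a fetch on first access

    def get(self) -> str:
        now = self._clock()
        if now >= self._expires:
            try:
                self._value = self._fetch()
                self._expires = now + self._ttl
            except ConnectionError:
                # Provider unreachable: keep serving the last known value,
                # and retry on the next call. Fail only if we have nothing.
                if self._value is None:
                    raise
        return self._value
```

Serving a stale value during an outage trades strict freshness for availability. A stricter policy could refuse to serve values older than a hard limit.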

Layered, Declarative Structure: The machinery follows a layered architecture: base container, worker framework, runtime environment, and application layer. Each component integrates precisely like a calibrated mechanism, governed by declarative configuration, with all operational expectations defined up front. No manual patching or ad hoc config changes are permitted in production. This isn't ritual; it's fundamental law. This implements the industry best practice of strictly separating build, release, and run phases, creating machinery that's structured to refresh credentials automatically, detect configuration anomalies, and restore functionality during critical system collapse.

Pipeline certification converts DevOps steps into enforceable contracts. Certified pipelines defined in YAML do more than automate deployments; they encode survivability thresholds as conditions that must hold before anything runs. Each pipeline stage (e.g., WAF application, AKS provisioning, secrets injection) is tied to template-driven gates that block execution unless all required conditions are met. This removes ambiguity from operational readiness. A system that reaches production has, by definition, passed all structural integrity gates. Failure to deploy becomes a protective mechanism, not a defect.
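The gating behavior can be sketched as ordered stages, each with named conditions that must all hold before the stage runs. The stage names follow the examples above. The conditions and context keys are hypothetical. In practice they would be loaded from the YAML templates rather than written inline.

```python
from dataclasses import dataclass
from typing import Callable

Gate = Callable[[dict], bool]  # predicate over the pipeline context


@dataclass(frozen=True)
class Stage:
    name: str
    gates: dict  # condition name -> Gate


class GateFailure(RuntimeError):
    """Raised when a stage's required conditions are not all met."""


def run_pipeline(stages: list, ctx: dict) -> list:
    """Run stages in order; stop at the first stage with any failing gate."""
    completed = []
    for stage in stages:
        failed = [name for name, check in stage.gates.items() if not check(ctx)]
        if failed:
            raise GateFailure(f"{stage.name} blocked: {', '.join(failed)}")
        completed.append(stage.name)  # a real stage would do its work here
    return completed


# Hypothetical conditions for the stages named in the text.
STAGES = [
    Stage("waf-application", {
        "policy declared": lambda c: bool(c.get("waf_policy")),
    }),
    Stage("aks-provisioning", {
        "cluster version pinned": lambda c: bool(c.get("k8s_version")),
        "at least two nodes": lambda c: c.get("node_count", 0) >= 2,
    }),
    Stage("secrets-injection", {
        "all references resolvable": lambda c: c.get("unresolved_refs") == [],
    }),
]
```

A missing context key fails its gate rather than passing silently. This matches the principle that failure to deploy is the protective default.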

The system has been implemented in over 30 programming languages, with most enterprise teams building in C#, Java, and other industry-standard technologies. This flexibility enables organizations to standardize their deployment approach while supporting diverse technology stacks.

In this example, the application layer bootstraps from declarative YAML files in /var/www/.rabbit/*.yml, defining everything from routes to caching policies. This architecture guarantees that service behavior stems from configuration rather than hardcoded logic—a pattern replicated across all supported languages. Teams implementing these patterns should explore the user-centric software development guide for additional insights on structuring applications around these principles.

Runtime Validation and Enforcement: Every deployment begins with a comprehensive validation of its environment, similar to how network hardware performs power-on self-tests before joining the network. This approach embodies what Womack, Jones, and Roos (1990) identified as "jidoka" in Toyota's production system - the principle that systems must automatically detect abnormalities and stop themselves rather than allowing defects to propagate. Containers validate the presence and correctness of secrets, configuration, and required files before executing any application logic. If validation fails, execution is halted, with no exceptions. Environments must be ephemeral, so that developers can spin up working clones of any environment.

A dedicated environment loader function reads definitions from /etc/worker/environment and dynamically translates external configuration into internal execution context as constants, environment variables, and server variables. This implements the core infrastructure principle of keeping configuration separate from code, ensuring that the same application binary can run in multiple environments without modification.
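The document does not specify the format of `/etc/worker/environment`. Assuming simple `KEY=VALUE` lines (with optional `export` prefixes and quotes), an environment loader might look like the Python sketch below. It covers only the environment-variable side; defining constants or server variables is language- and framework-specific. `load_environment` is a hypothetical name.

```python
import os
from typing import Dict, MutableMapping, Optional


def load_environment(
    path: str, target: Optional[MutableMapping[str, str]] = None
) -> Dict[str, str]:
    """Read KEY=VALUE lines from `path` and export them into `target` (default: os.environ)."""
    if target is None:
        target = os.environ
    loaded: Dict[str, str] = {}
    with open(path, encoding="utf-8") as fh:
        for lineno, raw in enumerate(fh, start=1):
            line = raw.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            if line.startswith("export "):
                line = line[len("export "):]
            key, sep, value = line.partition("=")
            key, value = key.strip(), value.strip()
            if not sep or not key:
                raise ValueError(f"{path}:{lineno}: expected KEY=VALUE, got {raw.strip()!r}")
            if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
                value = value[1:-1]  # drop one pair of matching surrounding quotes
            loaded[key] = value
    # Export only after the whole file parsed, so a bad file exports nothing.
    target.update(loaded)
    return loaded
```

Because the loader is the only place that knows where configuration comes from, the same binary runs unchanged in every environment; only the file at `/etc/worker/environment` differs.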

Separation of Duties and Versioned Change Control: All deployment logic, infrastructure definitions, and service configurations are version-controlled, treating infrastructure configuration with the same discipline traditionally applied only to application code. Approvals, audit trails, and change management are enforced as part of the operational workflow, not as an afterthought. This collaborative cadence approach ensures teams maintain velocity without sacrificing governance, as described in the user-centric software development guide.
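Separation of duties can be enforced mechanically at merge time. The sketch below, with hypothetical names, treats a change as mergeable only when its tests have passed and it carries the required number of approvals from people other than its author. Most hosted Git platforms offer comparable branch-protection settings, so this illustrates the rule rather than prescribing an implementation.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ChangeRequest:
    author: str
    approvers: Set[str] = field(default_factory=set)
    tests_passed: bool = False


def may_merge(change: ChangeRequest, min_approvals: int = 1) -> bool:
    """Allow a merge only with passing tests and enough independent approvals."""
    independent = change.approvers - {change.author}  # self-approval never counts
    return change.tests_passed and len(independent) >= min_approvals
```

Keeping the rule in code (or in platform policy) rather than in a checklist means the audit trail and the enforcement are the same thing.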

No Drift, No Improvisation: The architecture eliminates configuration drift by enforcing all changes through versioned, reviewed, and tested manifests. Just as network configurations should be consistent across all devices in a production environment, this approach ensures development, staging, and production environments remain identical in structure. This aligns with what Kim et al. (2021) describe in The DevOps Handbook as "security shifting left," where "security gates become automated tests and policies" that are enforced continuously throughout the pipeline. Rollbacks, blue-green deployments, and event-driven automation are all governed by declarative patterns that persist even when networks don't. Organizations implementing these practices see significant improvements in compliance posture, as documented in the SOC2 compliance guide.
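Drift detection amounts to comparing the versioned declaration against what is actually running. The recursive comparison below is a minimal Python sketch that assumes both states are available as nested dictionaries with string keys; how the observed state is collected (cloud APIs, `kubectl get`, agent reports) is out of scope, and `detect_drift` is a hypothetical name.

```python
from typing import Any, Dict, List


def detect_drift(declared: Dict[str, Any], actual: Dict[str, Any], prefix: str = "") -> List[str]:
    """List differences between declared (versioned) and observed state, recursively."""
    drift: List[str] = []
    for key in sorted(set(declared) | set(actual)):  # keys assumed to be strings
        path = f"{prefix}{key}"
        if key not in actual:
            drift.append(f"missing: {path}")
        elif key not in declared:
            drift.append(f"unmanaged: {path}")
        elif isinstance(declared[key], dict) and isinstance(actual[key], dict):
            drift.extend(detect_drift(declared[key], actual[key], prefix=f"{path}."))
        elif declared[key] != actual[key]:
            drift.append(f"changed: {path} ({declared[key]!r} -> {actual[key]!r})")
    return drift
```

An empty result means the environment matches its manifest; anything else is either reverted or promoted into a reviewed change, never left in place.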

Multi-Cloud and Edge Resilience: The architecture operates across multi-cloud, hybrid, and disconnected environments, extending beyond basic portability into true infrastructure independence. All requirements for operation are declared and enforced locally, enabling nodes to function independently—even when upstream systems are unavailable. For sysadmins familiar with high-availability clustering, this approach functions similarly but at a much larger scale, with automatic validation built directly into each component.
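One way to keep operating when upstream systems are unreachable is to persist the last-known-good declaration locally and fall back to it. The Python sketch below assumes the declaration is JSON-serializable and that network failures surface as `OSError` (which includes `ConnectionError` and `urllib.error.URLError`); `load_declared_state` and the `fetch_upstream` callable are hypothetical.

```python
import json
import os
from typing import Any, Callable, Dict


def load_declared_state(
    fetch_upstream: Callable[[], Dict[str, Any]], cache_path: str
) -> Dict[str, Any]:
    """Prefer the upstream declaration; fall back to the last-known-good local copy."""
    try:
        state = fetch_upstream()
    except OSError:
        # Upstream unreachable: keep operating on the locally persisted declaration.
        with open(cache_path, encoding="utf-8") as fh:
            return json.load(fh)
    # Refresh the local copy atomically so a crash mid-write cannot corrupt it.
    tmp_path = cache_path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as fh:
        json.dump(state, fh)
    os.replace(tmp_path, cache_path)
    return state
```

If the upstream is down and no local copy has ever been written, the `open` call raises, which keeps a node from starting with no declared state at all.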

This platform independence is a core architectural advantage—the same deployment works consistently whether on physical hardware, virtualized infrastructure, or across multiple cloud providers. Organizations can move from one environment to another without rewriting applications or deployment configurations. This isn't theoretical portability; it's operational reality that enables true cloud hopping capabilities, allowing you to shift environments faster than threats can target them.

This architecture is not just a collection of best practices—it is a rigorously enforced system that encodes modern cloud-native principles directly into infrastructure patterns, validated by real-world deployments across highly regulated, high-stakes environments. Its guarantees are structural, not aspirational, and every claim is supported by evidence in operational documentation. Organizations ranging from small teams to enterprise operations can implement these patterns using the cloud automation best practices.

Advanced Metrics

The software industry has embraced the DORA metrics (deployment frequency, lead time for changes, time to restore service, and change failure rate) as the standard for measuring DevOps performance. While these metrics provide valuable insights, they don't fully capture what matters in high-stakes environments where software must evolve through multiple refinement phases.
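To make the four DORA measures concrete, the sketch below computes them from a list of deployment records. The `Deployment` record and `dora_summary` are hypothetical, medians are used for the two duration measures, and restore time is simplified to run from the deployment time rather than from when the failure was detected.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def dora_summary(deploys: List[Deployment], period_days: float) -> Dict[str, object]:
    """Compute the four DORA measures over one window (deploys must be non-empty)."""
    failures = [d for d in deploys if d.failed]
    restores: List[timedelta] = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore": median(restores) if restores else None,
    }
```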

Teams implementing this repository-centric architecture consistently score in the top 3% on DORA metrics, but operational experience in contested environments reveals that additional measures are critical. Each validation phase carries different significance and requires more nuanced measurement approaches than standard industry metrics can provide.

This architectural approach extends beyond DORA with metrics that better reflect the multi-stage reality of enterprise deployment:

Validation Gate Efficacy: This measures how effectively validation gates prevent compromised deployments from reaching production, much like how network security appliances track blocked threats rather than just throughput. A properly configured system should reject incompatible changes early—preventing downstream costs. The metric isn't how quickly changes deploy, but how effectively bad changes are caught before causing damage.
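Gate efficacy can be computed as the share of bad changes that validation gates stopped before production, which requires counting escaped defects (for example, from incident records) as well as rejections. The sketch below also reports the fraction stopped at the first gate as a rough proxy for catching problems early; the function name, its inputs, and that second figure are assumptions rather than a defined standard.

```python
from typing import Dict, Sequence


def gate_efficacy(
    caught_by_stage: Dict[str, int], escaped: int, stage_order: Sequence[str]
) -> Dict[str, float]:
    """Share of bad changes stopped before production, and share stopped at the first gate."""
    caught = sum(caught_by_stage.values())
    total_bad = caught + escaped
    if total_bad == 0:
        return {"efficacy": 1.0, "caught_in_first_stage": 0.0}  # nothing bad observed
    first = caught_by_stage.get(stage_order[0], 0) if stage_order else 0
    return {"efficacy": caught / total_bad, "caught_in_first_stage": first / total_bad}
```

For example, 6 rejections at linting, 3 at integration, 1 at staging, and 2 escapes give an efficacy of 10/12 (about 0.83), with half of all bad changes caught at the first gate.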

Configuration Consistency Index: This tracks the variance between environments throughout the deployment pipeline. Lower variance indicates better architectural integrity and reduces production surprises. For sysadmins who've dealt with the "it works in dev but fails in prod" problem, this metric quantifies your success at eliminating that class of issues entirely.
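The document does not define the index formally. One simple interpretation, sketched below, is the fraction of configuration keys whose values are identical across every environment after excluding keys that are legitimately environment-specific. Under this definition higher is better, which corresponds to the low variance described above. The function name and the exclusion mechanism are assumptions.

```python
from typing import Dict, Iterable


def consistency_index(
    envs: Dict[str, Dict[str, str]], expected_to_differ: Iterable[str] = ()
) -> float:
    """Fraction of compared keys whose value is identical in every environment.

    Keys in `expected_to_differ` (endpoints, credential references) are skipped;
    a key missing from any environment counts as inconsistent.
    """
    skip = set(expected_to_differ)
    keys = set().union(*(cfg.keys() for cfg in envs.values())) - skip
    if not keys:
        return 1.0
    consistent = 0
    for key in keys:
        values = [cfg.get(key) for cfg in envs.values()]
        if None not in values and len(set(values)) == 1:
            consistent += 1
    return consistent / len(keys)
```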

Artifact Completeness: Measures whether deployment artifacts contain all required metadata, credentials references, and validation hooks. Complete artifacts enable automated verification at every stage, complementing DORA's failure rate by preventing failures before they occur. Think of this as measuring the quality of your deployment packages, not just how often they deploy.
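A minimal Python sketch of this measure follows, assuming each artifact's metadata is available as a dictionary and using a hypothetical required-field schema (`version`, `commit`, `secret_refs`, `healthcheck`). The real list of required metadata would come from the organization's own artifact standard.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical schema: what every deployable artifact must carry.
REQUIRED_FIELDS: Tuple[str, ...] = ("version", "commit", "secret_refs", "healthcheck")


def artifact_completeness(
    artifacts: Iterable[Dict[str, object]],
    required: Tuple[str, ...] = REQUIRED_FIELDS,
) -> Tuple[float, List[str]]:
    """Return the share of complete artifacts and a description of each gap."""
    total = complete = 0
    gaps: List[str] = []
    for artifact in artifacts:
        total += 1
        missing = [f for f in required if not artifact.get(f)]  # absent or empty
        if missing:
            gaps.append(f"{artifact.get('name', '<unnamed>')}: missing {', '.join(missing)}")
        else:
            complete += 1
    return (complete / total if total else 1.0), gaps
```

Reporting the gaps alongside the score turns the metric into a to-do list: each incomplete artifact names exactly what it lacks.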

Drift Resistance: Quantifies how well environments maintain their declared state over time. Repository-enforced configurations consistently achieve near-perfect resistance to unmanaged changes. This extends DORA's recovery-time insight by preventing the configuration drift that often necessitates recovery in the first place. Sysadmins who've chased down unexpected environment changes will immediately understand the value here.
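Assuming drift is checked on a schedule and each check yields the number of settings that differ from the declared state, drift resistance can be expressed as the share of checks that found no drift. The sketch and its name are illustrative; a fuller version might also weight checks by how long drift persisted.

```python
from typing import Sequence


def drift_resistance(drift_counts: Sequence[int]) -> float:
    """Share of scheduled checks that found the environment exactly as declared."""
    if not drift_counts:
        raise ValueError("at least one drift check is required")
    clean_checks = sum(1 for count in drift_counts if count == 0)
    return clean_checks / len(drift_counts)


# e.g. four daily checks, one of which found a single drifted setting
print(drift_resistance([0, 0, 1, 0]))  # 0.75
```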

These architectural metrics don't replace DORA—they complement it by addressing the underlying structures that make DORA excellence possible in high-stakes environments. Traditional DevOps often achieves speed by sacrificing governance; this approach achieves both simultaneously through structural enforcement of proven cloud-native principles.

Organizations implementing this repository-centric architecture not only improve their DORA metrics but gain measurement systems that provide actionable insights across the entire deployment lifecycle—from development through testing, validation, production, and recovery scenarios.

This architectural approach scales across enterprises without creating new bottlenecks—enabling cloud hopping at massive scale while maintaining security and reliability.

Seamless Scaling

Most scaling efforts break down at central points of failure or assume everything stays stable. This approach takes a different direction: decentralization and autonomy. Each deployment validates itself, adapts, and scales without waiting for a control tower. Each team owns their software and controls the process of how it reaches their customers.

Mobile to Banking

The Mobile Credential project began as a greenfield initiative that funded much of Transact's re-platforming efforts. As shown in the detailed case study video, it demonstrates how cloud hopping principles enable resilient operations at enterprise scale, allowing Transact Campus (formerly Blackboard) to overcome extraordinary scale requirements while meeting aggressive timelines:

- Zero-downtime deployment across multiple cloud regions

- Support for financial transactions at major institutions

- Seamless integration with physical access control systems

- Capacity to handle rapid adoption surges

As captured in the video interview with their development manager, this architecture became the cornerstone of Transact's market-leading platform. However, the framework's true versatility emerged when it evolved beyond Mobile Credential to power Transact IDX—a comprehensive cloud-based stored value solution launched in 2023 that functions essentially as an online bank. The platform maintains 100% uptime with sub-two-second transaction response times while processing $53 billion annually in campus transactions. Built on multi-region Azure architecture with AKS (Azure Kubernetes Service), Transact IDX manages more than 11 million meal plans while maintaining compliance with PCI-DSS, SOC 2 (Type II), and OWASP Top 10 security standards.

This evolution from access control to financial platform demonstrates how cloud hopping creates both a security advantage and unprecedented deployment versatility. The IDX platform manages financial transactions, meal plans, and stored value accounts for millions of students, processing payments, managing balances, handling reconciliation, and providing real-time financial reporting while maintaining compliance with financial regulations.

The same architectural principles that enabled secure access control now power banking-grade financial transactions with:

- Regulatory compliance at scale through automated validation gates

- Seamless integration with payment processors and financial institutions

- Cloud-native financial services transformed from traditional on-premise systems

- Real-time transaction processing with sub-two-second response times

The system can deploy complete infrastructure to any cloud provider in under 45 minutes—fast enough to maintain operational continuity even during active threats or regional outages. This capability was critical to Transact's expansion across educational, healthcare, and corporate sectors.

Every deployment is self-contained with its own validation checks. You can run 100, 1,000, or 10,000 deployments across clouds, teams, and zones—without a single control tower or centralized bottleneck.

Enterprise Deployment

At enterprise scale, consistency becomes the critical success factor. Our approach uses declarative manifests to ensure identical behavior across thousands of deployments without manual configuration or tribal knowledge:

The Kubernetes deployment manifest defines everything the container needs: mounted volumes for credentials, config files, certificates, and explicit environment variables. Nothing is assumed or inherited implicitly. Everything is declared.
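The "everything is declared" rule can also be checked automatically. The Python sketch below inspects a Deployment manifest that has already been parsed into a dictionary, using the standard Kubernetes field names (`spec.template.spec.containers`, `env`, `envFrom`, `volumeMounts`, `volumes`). Treating `envFrom` as implicit inheritance is a policy assumption made for illustration, and `check_explicit_manifest` is a hypothetical name.

```python
from typing import Any, Dict, List


def check_explicit_manifest(deployment: Dict[str, Any]) -> List[str]:
    """Flag containers in a parsed Deployment that rely on implicit inputs."""
    pod_spec = deployment["spec"]["template"]["spec"]
    declared_volumes = {v["name"] for v in pod_spec.get("volumes", [])}
    problems: List[str] = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        if not container.get("env"):
            problems.append(f"{name}: no explicit env entries")
        if container.get("envFrom"):
            # Policy choice: envFrom pulls in every key of a ConfigMap/Secret unseen.
            problems.append(f"{name}: envFrom imports variables implicitly")
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared_volumes:
                problems.append(f"{name}: mount {mount['name']!r} has no matching volume")
    return problems
```

Run as a pipeline gate, a non-empty result blocks the deployment before the manifest ever reaches a cluster.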

This manifest-driven approach enables several scale advantages that traditional approaches can't match:

- Linear Scaling Operations: Traditional environments need 1 IT/SRE for every 3.3 developers, while this approach requires only 1 for every 8.5.

- Consistent Validation: Organizations typically need 1 QA Engineer for every 39 developers, but this approach requires only 1 for every 98. Validation becomes a system property rather than a human responsibility, eliminating the bottleneck of manual reviews and approvals.

- Auditable Artifacts: Standard enterprises need 1 Security Engineer for every 20 developers, but this approach needs only 1 for every 100.

- Automated Remediation: Traditional operations teams need 1 DevOps Engineer for every 7 developers, while this approach requires only 1 for every 30.

In practical terms, applying these ratios to a 700-developer organization yields roughly 365 operations staff under traditional staffing versus about 120 with this approach, freeing nearly 250 specialized technical roles for innovation rather than maintenance.
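The staffing figures follow from the ratios in the list above. This short Python calculation reproduces them for 700 developers, leaving headcounts unrounded and assuming these four roles make up the operations staff being compared.

```python
from typing import Dict, Tuple

# Developers per specialist, from the list above: (traditional, this approach).
RATIOS: Dict[str, Tuple[float, float]] = {
    "IT/SRE": (3.3, 8.5),
    "QA Engineer": (39, 98),
    "Security Engineer": (20, 100),
    "DevOps Engineer": (7, 30),
}


def staffing(developers: int) -> Tuple[float, float]:
    """Unrounded specialist headcount: (traditional, this approach)."""
    traditional = sum(developers / trad for trad, _ in RATIOS.values())
    streamlined = sum(developers / new for _, new in RATIOS.values())
    return traditional, streamlined


trad, new = staffing(700)
print(f"traditional ~{trad:.0f}, this approach ~{new:.0f}, freed ~{trad - new:.0f}")
# traditional ~365, this approach ~120, freed ~245
```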

The most significant advantage comes from how operational knowledge accumulates within the delivery system itself rather than in documentation or team members' heads. When a new team adopts this pattern, they immediately inherit:

- Proven validation logic that prevents common failure modes

- Configuration templates tailored to their technology stack

- Security controls that satisfy enterprise requirements

- Observable deployment pipelines with consistent metrics

These inheritance characteristics explain why initial implementations take 4-6 weeks, but subsequent teams onboard in days. One enterprise organization demonstrated this by scaling from 1 team to 28 teams with identical deployment patterns in just 8 months.

Entrypoint scripts perform early validation by resolving secrets through secure URI patterns. The verification process loads configurations, exports variables, authenticates service actors, and fetches secrets, all with strict error handling. If any step fails, the container halts immediately, enforcing fail-fast behavior before problems cascade.
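A Python sketch of this entrypoint sequence follows. The secret-reference format (`scheme://store/path`), the allowlisted scheme names, and the injected `fetch` callable are assumptions for illustration; a production entrypoint would call the actual secret store's client and would typically be written in whatever language the container image already uses.

```python
import sys
from typing import Callable, List, Tuple
from urllib.parse import urlsplit

# Hypothetical allowlist of secret-store schemes accepted in secret references.
ALLOWED_SECRET_SCHEMES = {"vault", "azurekv"}


def resolve_secret_ref(ref: str, fetch: Callable[[str, str, str], str]) -> str:
    """Resolve a reference like 'vault://store/path/key' via the injected `fetch`."""
    parts = urlsplit(ref)
    if parts.scheme not in ALLOWED_SECRET_SCHEMES:
        raise ValueError(f"refusing secret reference with scheme {parts.scheme!r}")
    key = parts.path.strip("/")
    if not parts.netloc or not key:
        raise ValueError(f"incomplete secret reference: {ref!r}")
    return fetch(parts.scheme, parts.netloc, key)


def run_entrypoint(steps: List[Tuple[str, Callable[[], None]]]) -> None:
    """Run startup steps in order and halt the container on the first failure."""
    for name, step in steps:
        try:
            step()
        except Exception as exc:  # any failure here is fatal by design
            print(f"entrypoint step {name!r} failed: {exc}", file=sys.stderr)
            sys.exit(1)
```

Each step (load configuration, export variables, authenticate, fetch secrets) is passed as a named callable, so the first exception stops the sequence and the process exits with status 1 before any application code runs.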

Organizational Transformation

Consistent with Conway's Law, which holds that a system's design mirrors the communication structure of the organization that builds it, an integrated approach to software delivery leads to more integrated teams with shared responsibility for outcomes. This creates a virtuous cycle where improved deployment capability enhances organizational capability, which in turn drives further technical innovation.

The DevSecOps workflow creates a natural equilibrium between vision and operation, where balanced partnerships drive unified delivery:

- Product Owners define business requirements while SREs ensure those requirements operate reliably in production

- Scrum Masters shepherd the agile process while DevOps Engineers implement the technical pipeline

- Software Engineers write application code while Security Engineers ensure that code is protected

- Product Architects design the system structure while QA Engineers validate that structure works as intended

The workflow moves through three key phases:

- Make: Prioritizing user stories, designing architecture, developing code, and creating container images

- Refine: Monitoring performance, refactoring code, improving architecture, and running security tests

- Verify: Validating requirements, running acceptance and regression tests, and performing security checks

This approach supports and accelerates developer adoption and best practices:

- CLI tools and one-off jobs created by anyone

- Stateless APIs and microservices